Welcome back,

On 14th March, Google announced that it is working on a new medical AI system called Med-PaLM 2 at an annual event called “The Check Up.”

I’m really bullish on the impact of Google Health’s actual A.I. research and development. I recently reported on their colorectal cancer research, as well as the health-tech announcements, new initiatives, and partnerships Google Health unveiled at “The Check Up.”

You can watch last week’s livestream of the event on YouTube. The announcement of Med-PaLM 2 starts at approximately 16:30.

But it’s not the device hardware upgrades that interested me; it was Med-PaLM, the healthcare chatbot.

Recent progress in large language models (LLMs) — AI tools that demonstrate capabilities in language understanding and generation — has opened up new ways to use AI to solve real-world problems.

In an era where Generative A.I. claims to be changing industries, I think how we access healthcare information and advice is where it could be truly impactful. That may not happen on ChatGPT, Bard, or Claude; it needs a specialized conversational A.I. agent trained on the right data.

What is Med-PaLM?

Med-PaLM is Google’s variant of the PaLM language model optimized for medical questions. The latest version is designed to answer medical questions reliably at an expert level.

PaLM stands for Pathways Language Model, and I’m really encouraged by what Google is doing with it, including the PaLM API and MakerSuite.

Med-PaLM 2 is Very Much Improved

Google first introduced Med-PaLM late last year. It’s designed to provide high-quality responses to medical questions.

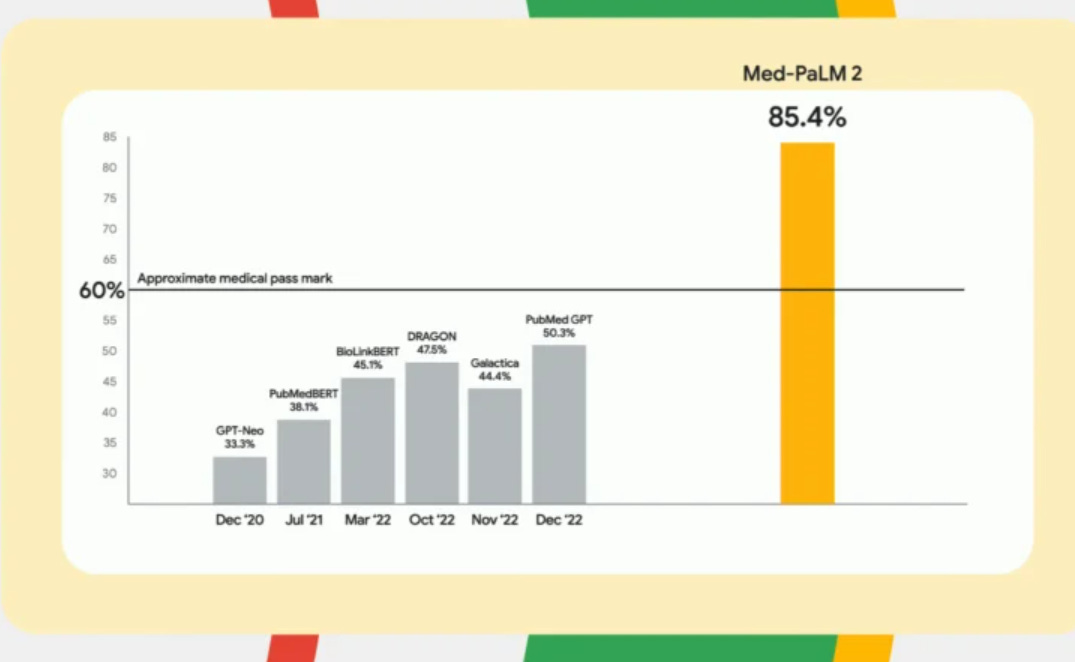

Med-PaLM was the first AI system to receive a passing score, meaning more than 60%, on multiple-choice questions similar to those used in U.S. medical licensing exams.

Google Health said the second iteration of the technology, Med-PaLM 2, consistently performed at an “expert” level on medical exam questions. Med-PaLM 2 has reached more than 85% accuracy, scoring 18 percentage points higher than the original Med-PaLM.
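To put those numbers in context, here is a rough sketch in Python of how a multiple-choice accuracy score is computed and what the 18-point gap implies. It assumes the "18%" figure means percentage points (in which case the earlier score works out to roughly 67%), and the toy answer key is made up purely for illustration. This is not Google's evaluation code.

```python
PASS_MARK = 60.0  # approximate passing threshold mentioned above


def accuracy_pct(predictions, answers):
    """Percentage of multiple-choice questions answered correctly."""
    correct = sum(p == a for p, a in zip(predictions, answers))
    return 100.0 * correct / len(answers)


def relative_gain_pct(old_pct, new_pct):
    """Improvement expressed relative to the old score, not in points."""
    return 100.0 * (new_pct - old_pct) / old_pct


# Figures from the announcement, rounded; the baseline is inferred.
med_palm_2 = 85.0
implied_med_palm = med_palm_2 - 18.0  # assumes "18%" means percentage points

# Hypothetical five-question quiz, just to show the scoring itself.
toy_predictions = ["A", "C", "B", "D", "A"]
toy_answers = ["A", "C", "D", "D", "B"]
toy_score = accuracy_pct(toy_predictions, toy_answers)

print(f"Toy quiz accuracy: {toy_score:.1f}%")
print(f"Implied earlier score: {implied_med_palm:.1f}%")
print(f"Relative gain: {relative_gain_pct(implied_med_palm, med_palm_2):.1f}%")
print(f"Earlier score clears the pass mark: {implied_med_palm > PASS_MARK}")
```

Read this way, an 18-point jump from about 67% to about 85% is closer to a 27% relative improvement, which is why it matters whether a headline figure is quoted in points or in percent.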

In some ways, Med-PaLM is a GPT-4-like chatbot for healthcare and our medical questions. Google Search could eventually tap into this specialized language model.

The variety of products Google will release based on PaLM will boggle the mind, including a very good competitor to Microsoft’s GitHub Copilot. That is before we even get to how much ChatGPT is changing the way developers code and elevating the no-code movement.

PaLM will turn out to be way more impactful than GPT in my opinion, simply due to the variety of products Google will release in 2023 and 2024. Med-PaLM has always excited me. Google and DeepMind developed Med-PaLM as an AI-powered chatbot tool designed to generate "safe and helpful answers" to questions posed by healthcare professionals and patients.

DeepMind is to Google what OpenAI is to Microsoft, and then some. DeepMind does research that’s much more about the future of science and A.I., somewhat in the same vein as Microsoft Research. But here is a case where Med-PaLM and DeepMind’s Sparrow could have a real impact.

Unlike Microsoft, Google also seems to prioritize doing its due diligence before releasing something. Dr. Alan Karthikesalingam, a research lead at Google Health, said the company is also testing Med-PaLM’s answers against responses from real doctors and clinicians. He said Med-PaLM’s responses are evaluated for factual accuracy, bias, and potential for harm.

Google Health is thus at another level of R&D sophistication when it comes to working with the actual medical community. I like how careful Google is with this as well:

Given the sensitive nature of medical information, Karthikesalingam said it could be a while before this technology is at the fingertips of the average consumer.

While GPT-4 can perform well on any number of tests, I just don’t find that as salient as Med-PaLM 2 in a medical context. Google will continue to work with researchers and experts on Med-PaLM, and if I had to guess, I’d say consumers might be able to try it out in beta in 2024.

Although I was not a fan of how Google was allowed to acquire Fitbit, or of what they have done with the product, and I’d generally trust Apple more with sensitive data, Google’s research in healthcare always feels very salient. Google Health is on a good trajectory.

So the fact that Med-PaLM 2 achieves an 18 percent improvement over its predecessor, and sits well above comparable language models on medical tasks, is a reminder of how good Google is going to be at turning large language models into real consumer products for very important factual information.

While Med-PaLM is a good idea, it’s exceedingly hard to get just right. Med-PaLM 2 was tested against 14 criteria, including scientific factuality, accuracy, medical consensus, reasoning, bias, and harm, evaluated by clinicians and non-clinicians from diverse backgrounds and countries.

The first iteration of Med-PaLM was evaluated in part on HealthSearchQA, a dataset of 3,375 frequently asked consumer questions collected using seed medical diagnoses and their related symptoms. The model was built on PaLM, a 540-billion-parameter LLM, and its instruction-tuned variant Flan-PaLM, and was assessed using the MultiMedQA benchmark.

Google Health also reported that, building on years of health AI research, they’re working with partners on the ground to bring the results of their research on AI-powered chest x-ray screening for tuberculosis (TB) into the care setting.

The PaLM API, MakerSuite, Med-PaLM 2, and their GitHub Copilot competitor are some of the things Google is doing with Generative A.I. that most interest me. We are brainwashed into thinking GPT is the real deal, but it’s the variety of complementary foundation models that is the really impressive thing about the Generative A.I. movement.
