Hey Everyone,

The way Meta frames some of its FAIR projects is getting more interesting in early 2024.

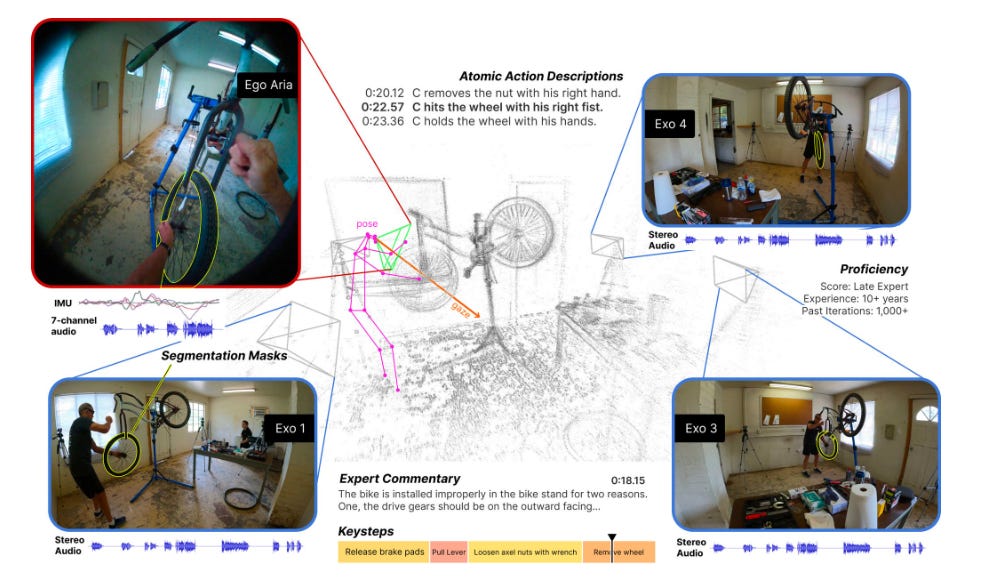

How will AI improve at visual learning? In short, Ego-Exo4D is an initiative that aims to advance AI's understanding of human skill by building a first-of-its-kind video dataset and benchmark suite in collaboration with the Ego4D university consortium.

How will the Metaverse interact with AI in ways that benefit people? I’ve been thinking a lot about this, and Meta recently released something along these lines. It’s also about AI’s ability to learn, and about visual learning in general.

Meta's Ego-Exo4D could also make skilled physical tasks teachable through augmented reality, or at least Meta hopes it can.

Introducing Ego-Exo4D: A foundational dataset for research on video learning and multimodal perception.

Meta wants to become the market leader in teaching physical skills, leveraging its $2 billion acquisition of Oculus VR and the fact that more than 3 billion people already use a Meta product every day.

Ego-Exo4D, released recently, is the result of a two-year collaborative effort by FAIR, Meta’s Project Aria, and 15 university partners. This work could help advance AI's understanding of complex human skills and enable new applications for AR systems, robotics, and more.

I actually think it might be more useful for AI and robotics than for the Metaverse per se, but Meta needs to play up the Metaverse angle for its own interests.

➡️ Learn more about this foundational dataset for research on video learning and multimodal perception.

As multimodal AI matures, visual learning will be very important to how LLMs continue to evolve and become more useful to people.

Ego-Exo4D presents three meticulously synchronized natural language datasets paired with videos:

• Expert commentary, revealing nuanced skills.

• Participant-provided narrate-and-act descriptions in a tutorial style.

• One-sentence atomic action descriptions to support browsing, mining the dataset, and addressing challenges in video-language learning.

Datasets like these are only going to get better.

Learn more about the work

Access the dataset

How Will AI Change Education?

Will spatial computing and the Metaverse impact how we learn in the coming generations?

The dataset was released one month ago:

The dataset features over 1,400 hours of videos of skilled human activities collected across 13 cities by 800+ research participants.

Thanks to Meta’s Project Aria glasses, the dataset also includes:

• Time-aligned seven-channel audio

• IMU (inertial measurement unit) data

• Eye gaze

• Head poses

• 3D point clouds of the environment

This work was made possible by collaboration between FAIR, Meta’s Project Aria and 15 university partners.

Traditional classrooms, online courses, tutors, and even skilled laborers could become obsolete. Instead, AI with vision capabilities, paired with VR or AR devices (even something as simple as a smartphone with a camera), could facilitate skill learning. For example, if you point your camera at a car, AI could guide you step by step through an oil change, including identifying which bolts to turn. Any task is at stake here, from cooking to heart surgery. What matters is less the task's complexity than whether the AI has been trained on data showing the task performed correctly.

Meta is making the data from this project publicly available this month (February 2024).

This is an example of how AI, and multimodal AI in particular, will continue to get better.

The project also involved a large collaboration with students, as its YouTube videos show.

Go Deeper

• Read the paper for a complete introduction

• Read the Overview page for a summary of what's in the dataset

• Learn about the Annotations

• Learn about the Benchmarks

• Watch a Video Introduction of the Dataset

• Explore the Dataset with the Visualization Tool

• Visit the Forum or contact the Ego-Exo4D team to ask questions and report issues with the data or related codebases.

Read the Paper

The paper is 76 pages long and was first released in late November 2023.


Subscribe to Artificial Intelligence Learning 🤖🧠🦾 to keep reading this post and get 7 days of free access to the full post archives.