What is Hippocratic AI's Polaris?

Polaris: A Safety-focused LLM Constellation Architecture for Healthcare

Hey Everyone,

As I cover Generative AI in healthcare, I have taken a special interest in what Hippocratic AI is doing.

In a paper submitted on March 20th, 2024, the researchers describe Polaris, which they call the first safety-focused LLM constellation for real-time patient-AI healthcare conversations.

Jensen Huang, Nvidia’s CEO, presented a 2-hour keynote on Monday that briefly spotlighted (YouTube) the work at Hippocratic AI, which published a preprint on what they call Polaris, “the first safety-focused large language model constellation for real-time patient-AI healthcare conversations.” So why is this exciting? Hippocratic AI explains:

“Unlike prior LLM works in healthcare focusing on tasks like question answering, our work specifically focuses on long multi-turn voice conversations. Our one-trillion parameter constellation system is composed of several multibillion parameter LLMs as co-operative agents: a stateful primary agent that focuses on driving an engaging conversation and several specialist support agents focused on healthcare tasks performed by nurses to increase safety and reduce hallucinations.”

They go on:

We develop a sophisticated training protocol for iterative co-training of the agents that optimize for diverse objectives. We train our models on proprietary data, clinical care plans, healthcare regulatory documents, medical manuals, and other medical reasoning documents.

We align our models to speak like medical professionals, using organic healthcare conversations and simulated ones between patient actors and experienced nurses. This allows our system to express unique capabilities such as rapport building, trust building, empathy and bedside manner.

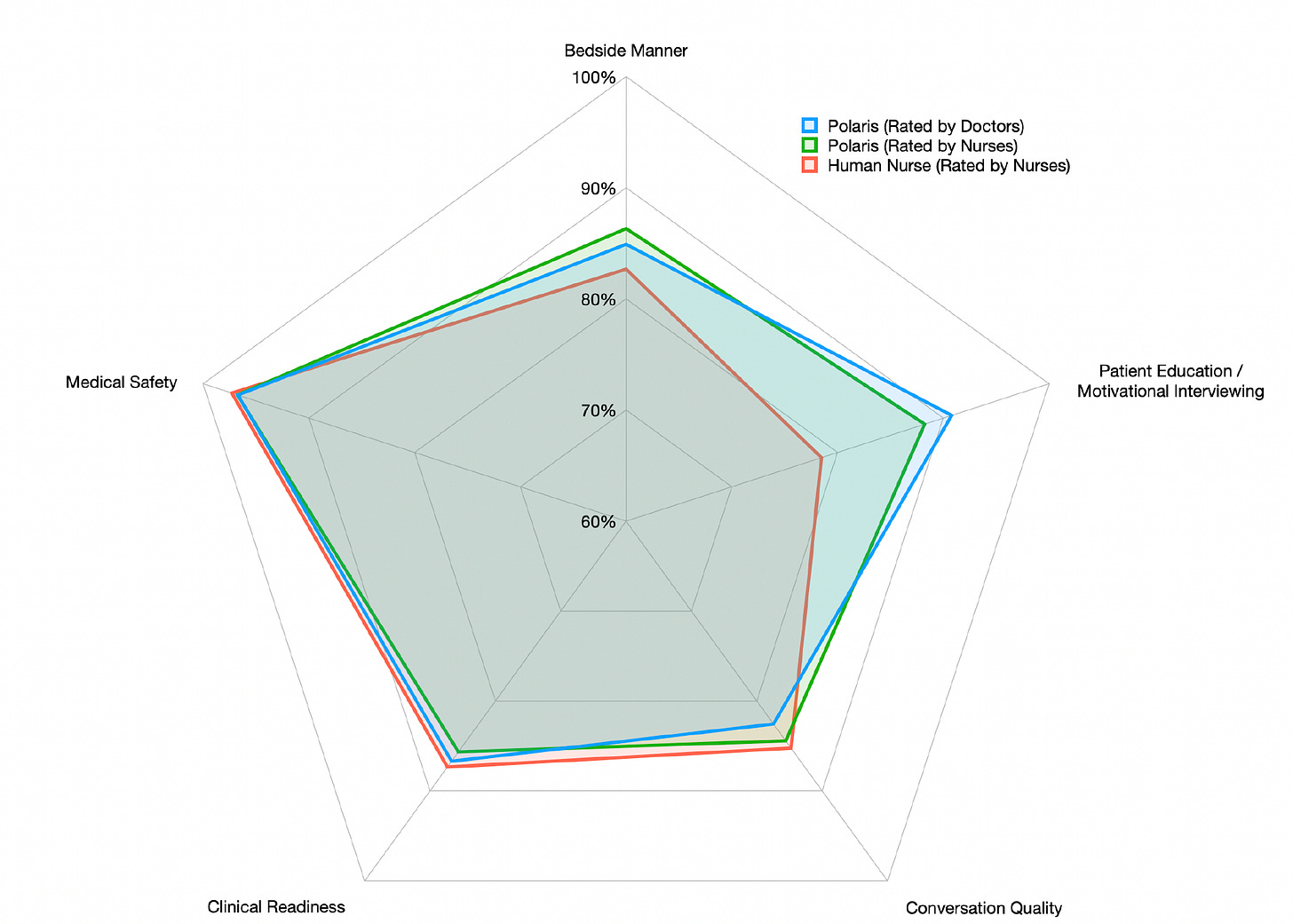

Finally, we present the first comprehensive clinician evaluation of an LLM system for healthcare. We recruited over 1100 U.S. licensed nurses and over 130 U.S. licensed physicians to perform end-to-end conversational evaluations of our system by posing as patients and rating the system on several measures. We demonstrate Polaris performs on par with human nurses on aggregate across dimensions such as medical safety, clinical readiness, conversational quality, and bedside manner. Additionally, we conduct a challenging task-based evaluation of the individual specialist support agents, where we demonstrate our LLM agents significantly outperform a much larger general-purpose LLM (GPT-4) as well as from its own medium-size class (LLaMA-2 70B).

As I explained in my recent deep dive into Hippocratic AI as an AI startup, this research and these pilots have allowed their team to develop digital AI agents that can interact with patients on repetitive use cases such as:

Early Use Cases

AI agents for chronic care management

Post-discharge follow-up

Congestive heart failure management

Kidney disease management

Pre-operative outreach

One of the examples on YouTube is such a case:

Hippocratic AI recruited 1,100 nurses and 130 physicians to engage its >1 trillion parameter LLM constellation in simulated patient-actor conversations, often exceeding 20 minutes. As you can see in the figure below, Polaris's performance, as rated by nurses, was as good or better on each of the 5 parameters assessed.

Why is this so special?

In addition, the system was trained on proprietary data including clinical care plans, healthcare regulatory documents, medical manuals, drug databases, and other high-quality medical reasoning documents.

Hippocratic AI is looking to augment hospital staffing with AI agents that can take some of the burden off burnt-out nurses, nursing assistants and medical office assistants.

Polaris is thus an architecture that shows promise for building AI agents that are useful in real hospital use cases, reducing hospital costs over time and improving the quality of life of nursing staff.

Polaris is a novel constellation architecture with multiple specialized healthcare LLMs working in unison. The young AI startup found this architecture allowed for accurate medical reasoning, fact-checking, and the avoidance of hallucinations, while maintaining a natural conversation with patients. In the team's words: “Safety is our North Star. We name our system after Polaris, a star in the northern circumpolar constellation of Ursa Minor, currently designated as the North Star.”

Accurate Medical reasoning

Fact-checking

Avoidance of hallucinations

Natural conversation with patients

Safety-built architecture for LLMs to optimize patient-experiences

Human-Alignment of LLMs for AI Agents

So what impresses me the most about these AI agents is the human alignment, the focus on safety, and the real-world use cases that reduce repetitive work.

Technically, Polaris comprises a primary conversational agent (70B - 100B parameters) supported by several specialist agents totaling over one trillion parameters for the constellation.

This primary agent is aligned to adopt a human-like conversational approach, exhibiting empathy and building rapport and trust.

The primary agent has also been trained to follow a care protocol (i.e., a checklist of tasks that must be completed) and to track its progress in completing the required tasks.
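To make the checklist idea concrete, here is a minimal Python sketch of how a stateful agent might track its progress through a care protocol. This is not Hippocratic AI's implementation: the `CareProtocol` class, its methods, and the example task names are all hypothetical and only illustrate the "checklist plus progress tracking" behavior described above.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class CareProtocol:
    """Hypothetical checklist of tasks the primary agent must complete on a call."""
    tasks: List[str]
    completed: Set[str] = field(default_factory=set)

    def mark_done(self, task: str) -> None:
        # Reject tasks that are not part of this protocol.
        if task not in self.tasks:
            raise ValueError(f"unknown task: {task}")
        self.completed.add(task)

    def remaining(self) -> List[str]:
        # Keep the protocol's original ordering for the pending tasks.
        return [t for t in self.tasks if t not in self.completed]

    def next_task(self) -> Optional[str]:
        # The first pending task, or None once everything is done.
        pending = self.remaining()
        return pending[0] if pending else None

    def is_complete(self) -> bool:
        return not self.remaining()


# Illustrative task names only; they are not taken from the paper.
protocol = CareProtocol(
    tasks=["confirm_identity", "review_medications", "check_symptoms"]
)
protocol.mark_done("confirm_identity")
```

In a real system the decision that a task is "done" would come from the model's reading of the conversation rather than an explicit `mark_done` call; the sketch only shows the state the agent has to carry from turn to turn.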

If nurses and patients like these AI agents, then they have a bright future and will show up more often in a more digital-friendly patient experience that is good for hospitals and good for patients. I’m very keen to see more healthcare-related AI that improves the patient experience.

Specialist Agents in Healthcare Settings - “Empathy Inference”

Given how young a startup Hippocratic AI is, the pragmatism of this research makes it a fascinating case of applied Generative AI in action.

The specialist agents are optimized for healthcare tasks. These include OTC toxicity detection, prescription adherence, lab reference range identification, and others shown in the figure above. The specialist agents “listen” to the conversation and guide the primary model if the discussion enters the specialist model’s domain.
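The "listen and guide" pattern can be sketched in a few lines of Python. This is a toy illustration under loud assumptions: simple keyword matching stands in for the specialist LLMs, and the `SpecialistAgent` class, the `collect_guidance` function, the keywords, and the guidance strings are all hypothetical. Only the two specialist domains (OTC toxicity and lab reference ranges) come from the paper's description.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SpecialistAgent:
    """Toy stand-in for a specialist LLM: keyword matching replaces real inference."""
    name: str
    keywords: List[str]
    guidance: str

    def listen(self, utterance: str) -> bool:
        # True when the utterance appears to enter this agent's domain.
        text = utterance.lower()
        return any(keyword in text for keyword in self.keywords)


def collect_guidance(utterance: str, specialists: List[SpecialistAgent]) -> List[str]:
    """Return guidance notes from every specialist whose domain was triggered."""
    return [f"{s.name}: {s.guidance}" for s in specialists if s.listen(utterance)]


# Hypothetical keywords and guidance text, for illustration only.
specialists = [
    SpecialistAgent("otc_toxicity", ["ibuprofen", "tylenol"],
                    "check daily dose against safe limits"),
    SpecialistAgent("lab_ranges", ["blood sugar", "a1c"],
                    "compare value with reference range"),
]

notes = collect_guidance("I took ibuprofen three times today", specialists)
```

The primary agent would then fold any returned notes into its next reply. When no specialist is triggered the list is empty and the conversation simply continues.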

The architecture of Polaris is very different from that of GPT-4, LLaMA, and other large language models: multiple domain-specific agents support a primary agent trained for nurse-like conversations, alongside recognition of patient speech and a digital human avatar face that communicates with the patient.

The support agents, which range from 50 to 100 billion parameters, provide a knowledge resource for labs, medications, nutrition, electronic health records, checklists, privacy and compliance, hospital and payor policy, and the need to bring in a human in the loop.

Empathy Inference in Digital Nursing

Hippocratic AI developed custom training protocols for conversational alignment using organic healthcare conversations and simulated conversations between patient actors and U.S. licensed human nurses; the conversations were reviewed by U.S. licensed clinicians for the company's unique version of reinforcement learning with human feedback (RLHF).

So the fine-tuning here looks to be pretty impressive for a two-year-old AI startup.

Hippocratic AI, backed by General Catalyst and Andreessen Horowitz, recently raised an additional $53 million Series A for its generative AI medical tools. I think this is validation that they are on the right track, even if it is early.

What is "Empathy Inference"? See the GTC keynote by Kimberly Powell, NVIDIA's VP & GM of Healthcare, which covers Hippocratic AI's technology and its partnership with NVIDIA.

This is essentially a real-time foundational Generative AI model for AI-to-patient interactions. The RLHF and technical feedback loops appear to make for a very solid architecture here.

What is Polaris?

Polaris is the first safety-focused Large Language Model (LLM) constellation for real-time patient-AI healthcare conversations. I think it has enormous potential for real utility and Generative AI products in healthcare, among the best that I have seen so far in 2024.

Real-Time Long Multi-Turn Voice Conversations

Unlike prior LLM works in healthcare, which focus on tasks like question answering, this AI startup’s work specifically focuses on long multi-turn voice conversations.

Polaris is a one-trillion parameter constellation system that is composed of several multibillion parameter LLMs as co-operative agents: a stateful primary agent that focuses on driving an engaging patient-friendly conversation and several specialist support agents focused on healthcare tasks performed by nurses, social workers, and nutritionists to increase safety and reduce hallucinations.

They develop a sophisticated training protocol for iterative co-training of the agents that optimize for diverse objectives.

Nursing

Social Work

Nutrition

Other applications?

What is Empathy Inference in Specific Patient Interventions?

They align their models to speak like medical professionals, using organic healthcare conversations and simulated ones between patient actors and experienced care-management nurses.

This allows their system to express unique capabilities such as rapport building, trust building, empathy and bedside manner augmented with advanced medical reasoning.

This is how you should develop an AI startup: solve real-world use cases, grounded in safety, that mimic the work of existing professionals like nurses and can ease their burden. These are products that I can imagine generating revenue fairly quickly, since they address major problems in our healthcare settings like:

Staffing issues

Burnt-out Nurses

Nursing shortages

Reducing costs of medical assistants, nursing assistants, etc…

Automating repetitive work that leads to burnout

Improving patient experiences and trust & safety for patients

Augmenting existing nurses and nursing assistants in their work

Hippocratic AI developed an iterative training protocol as follows:

Instruction-tuning on a large collection of healthcare conversations and dialog data

Conversation tuning of the primary agent on simulated conversations between patient actors and registered nurses on clinical scripts that teach the primary agent how to establish trust, rapport and empathy with patients while following complex care protocols

Agent tuning to teach the primary agent and specialist agents to coordinate with each other and resolve any task ambiguity. This alignment is performed on synthetic conversations between patient actors and the primary agent aided by the specialist agents of the constellation.

Conversation and Agent tuning were performed iteratively with self-training the primary agent to allow generalization to diverse clinical scripts and conditions and mitigate exposure bias.

Finally, human nurses played the role of patients and talked to the primary agent guided by the support agents. The nurses provided fine-grained conversational feedback on multiple dimensions including safety, bedside manner and knowledge, as well as rewrites of bad responses, which were used for RLHF.

I suggest you read the paper to more carefully understand what they have done in just two short years as a Generative AI startup, which I believe might make them a huge winner in the field.

The paper is only 53 pages, but it is a great example of an applied Generative AI architecture that solves real-world problems in healthcare and, importantly, enhances the experience of the key constituents, namely patients, nurses, staff and hospital management.

Partnering with Nvidia

Their chief science officer, Subhabrata (Subho) Mukherjee, with Jensen Huang. Obviously partnering with Nvidia gives them added credibility.

Summary of Polaris by Subho

To give the key highlights:

1. Polaris is the first safety-focused LLM for healthcare geared for real-time patient-AI voice conversations.

2. Polaris is a 1T+ parameter constellation system composed of several 70B-100B parameter LLMs trained as co-operative agents: a stateful primary agent driving an engaging conversation and several specialist agents focused on tasks performed by nurses to increase safety and reduce hallucinations.

3. Polaris performs on par with U.S. licensed human nurses on aggregate across dimensions such as medical safety, clinical readiness, conversational quality, patient education, and bedside manner.

4. For the Phase 2 testing, we recruited 1100 U.S. licensed nurses and 130 U.S. licensed doctors for end-to-end conversational evaluation— the most extensive evaluation performed to date for any healthcare LLM. - Source.

Paper: https://lnkd.in/dcSgUDeY

Blog: https://lnkd.in/ditm6TsS

What Hippocratic AI does:

“We train our own model using text from care plans, regulations, medical manuals and textbooks and further teach it medical reasoning. We perform extensive alignment to teach the model how to speak like a chronic-care nurse using conversations between registered chronic-care nurses and patient actors. We conduct a unique Reinforcement Learning with Human Feedback process using healthcare professionals to train and evaluate the model on several fine-grained aspects including domain knowledge, conversational style and task completion. Given our safety-first focus, we formed the Physician Advisory Council comprising of expert physicians from leading US hospitals, health systems and digital health companies, who will play a crucial role in guiding the development of our technology and ensuring that it is ready for safe deployment.” - Subhabrata (Subho) Mukherjee