What is OpenAI's Foundry?

A glimpse into the rumors about a supposed product that is already in beta.

Welcome Back,

The cloning of ChatGPT has begun. OpenAI is quietly launching a new developer platform that lets customers run the company’s newer machine learning models, like GPT-3.5, on dedicated capacity. Companies like Anthropic have also begun to do this with the technology behind Claude, according to TechCrunch.

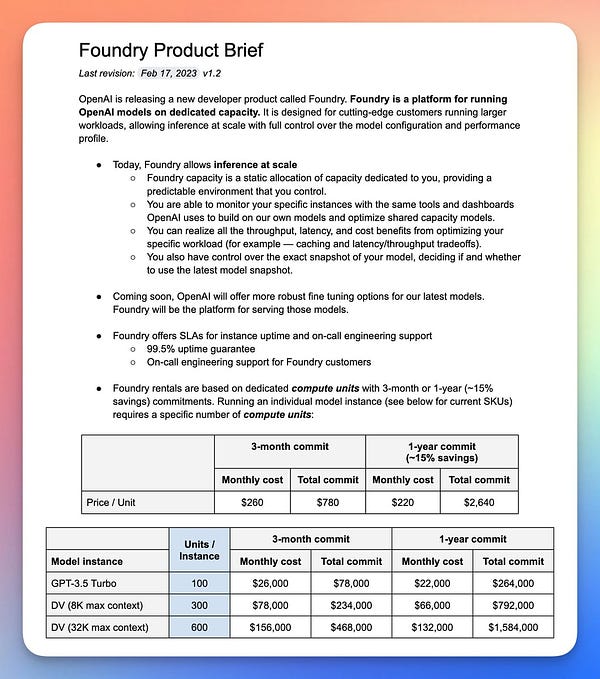

“[Foundry allows] inference at scale with full control over the model configuration and performance profile,” documents posted to Twitter state.

Companies like Snap appear to be customers: Snapchat’s My AI chatbot appears to be built entirely on this capability. I’m assuming it runs on GPT-3.5, the model described as “the latest version of OpenAI's GPT technology.”

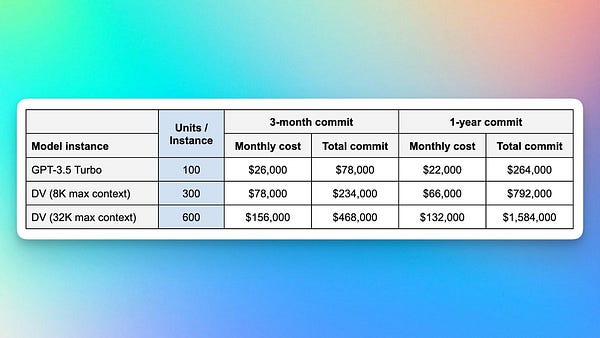

For many companies wanting to embed generative A.I. directly within their products, the cost seems to be worth it. The service reportedly offers SLAs for 99.5 percent uptime and on-call engineering support. Running a lightweight version of GPT-3.5 will reportedly cost $26,000 a month on a three-month commitment ($78,000 in total) or $264,000 over a one-year commitment.
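To put these reported figures in perspective, here is a back-of-the-envelope calculation. It is only a sketch: it uses the numbers from the leaked brief, which OpenAI has not confirmed, assumes a 30-day month for the uptime math, and the helper names are purely illustrative.

```python
"""Back-of-the-envelope math on the reported (unconfirmed) Foundry pricing and SLA."""

# Figures as reported from the leaked product brief; OpenAI has not confirmed them.
MONTHLY_PRICE_3_MONTH_USD = 26_000   # per month, on a three-month commitment
TOTAL_PRICE_1_YEAR_USD = 264_000     # total, on a one-year commitment
SLA_UPTIME = 0.995                   # 99.5 percent uptime


def commitment_total(monthly_price: float, months: int) -> float:
    """Total spend for a commitment billed at a flat monthly price."""
    return monthly_price * months


def effective_monthly(total_price: float, months: int) -> float:
    """Average monthly cost of a commitment quoted as a lump sum."""
    return total_price / months


def max_downtime_hours(uptime: float, days: int = 30) -> float:
    """Downtime an uptime SLA tolerates over a period of `days` days (assumed 30)."""
    return (1.0 - uptime) * days * 24


if __name__ == "__main__":
    three_month = commitment_total(MONTHLY_PRICE_3_MONTH_USD, 3)
    yearly_monthly = effective_monthly(TOTAL_PRICE_1_YEAR_USD, 12)
    discount = 1 - yearly_monthly / MONTHLY_PRICE_3_MONTH_USD
    print(f"Three-month commitment total: ${three_month:,.0f}")
    print(f"One-year commitment per month: ${yearly_monthly:,.0f}")
    print(f"Discount vs. the three-month rate: {discount:.1%}")
    print(f"Downtime allowed in a 30-day month: {max_downtime_hours(SLA_UPTIME):.1f} h")
```

On these numbers, the one-year deal works out to $22,000 a month, roughly 15 percent below the three-month rate, and a 99.5 percent uptime SLA still allows about 3.6 hours of downtime in a 30-day month.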

For OpenAI, this will be an important source of AI-as-a-Service revenue.

Much of this is based on screenshots from Twitter, so who knows how reliable they are. According to those screenshots, Foundry will also provide some level of version control, letting customers decide whether or not to upgrade to newer model releases, as well as “more robust” fine-tuning for OpenAI’s latest models.

OpenAI has yet to publicly announce its new developer product, Foundry. However, screenshots shared across social media show a company product brief, last updated on Feb. 17, suggesting the launch is already under way.

This means ChatGPT could literally be everywhere within a few months. As LLMs advance and get cheaper, they will bring new experiences to apps and platforms and take on new tasks, especially in 2023 and 2024.

What Are OpenAI Foundry’s Capabilities?

Other capabilities listed in the brief include:

A static allocation of capacity dedicated to the customer, providing a predictable, controllable environment.

The ability to monitor specific instances with the same tools and dashboards that OpenAI uses to build and optimize its shared-capacity models.

The ability to realize the full throughput, latency, and cost benefits of optimizing your workload, including caching and latency/throughput tradeoffs.

Control over the exact model snapshot, so users can decide whether or not to move to the latest snapshot.

The Decoder covered how the leak gives us glimpses into GPT-4.

The GPT-3.5 Turbo model presumably corresponds to ChatGPT’s Turbo model, and the name DV could stand for Davinci, which is already the name of the largest variant of GPT-3 and GPT-3.5.

The Davinci models are where things get interesting: one with around 8,000 tokens of context – which is twice the length of ChatGPT – and one with a massive 32,000 tokens of context. If these numbers are confirmed, it would be a massive leap, so it could be GPT-4 or a direct predecessor.

The largest DV model would thus have eight times the context length of OpenAI’s current GPT models, and could likely process well over 30,000 words or 60 pages of context. - The Decoder

That’s a good insight.

While the document does not disclose where that compute will be hosted, it is likely Microsoft Azure, OpenAI’s cloud partner.

I think if OpenAI’s Foundry does well, Microsoft will attempt to acquire OpenAI outright. It would be valuable in Azure’s battle with AWS and Google Cloud at almost any price; Microsoft would just have to buy out the other 51% of OpenAI. At the current pace of innovation, others will nip at OpenAI’s heels one way or another, so its first-mover advantage might not be substantial, but Foundry’s customer list could be valuable as a future stream of revenue.