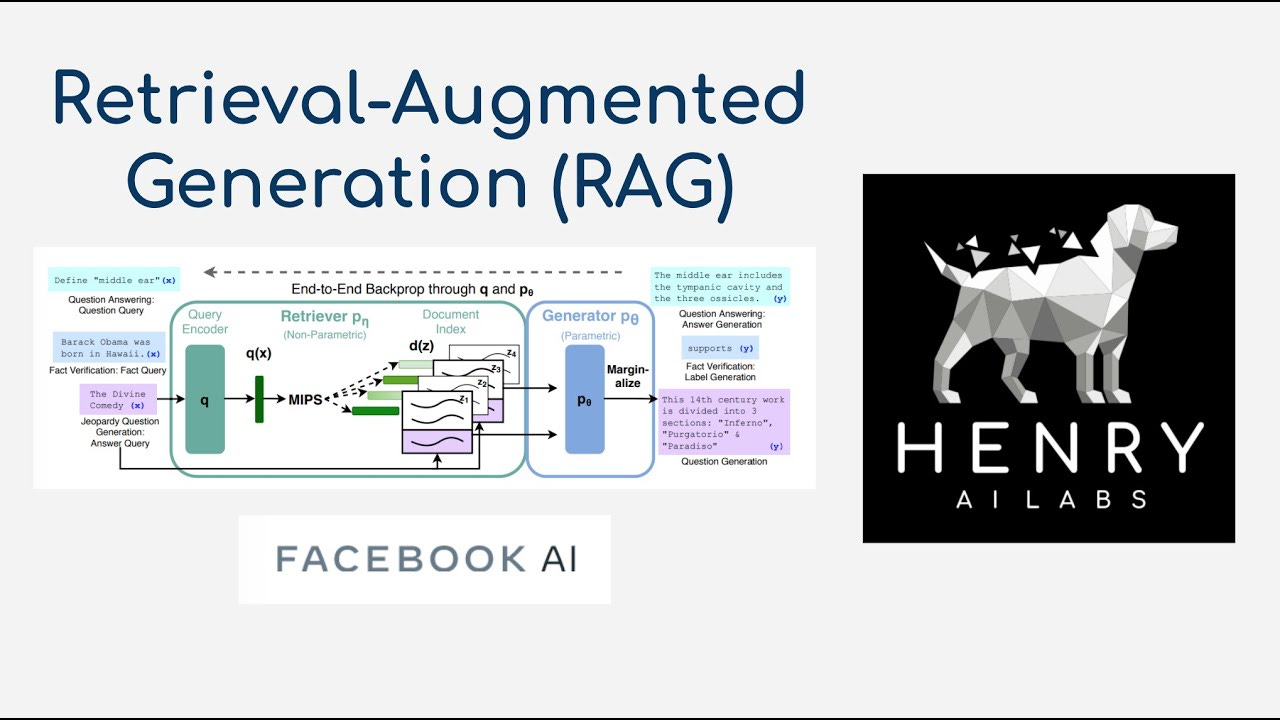

What is RAG: Retrieval Augmented Generation

RAG might help us optimize LLMs at scale. Thanks Meta!

It turns out RAG has a bright future outside of Meta A.I. as well.

Hey Everyone,

When I was covering startup Contextual A.I.'s launch from stealth, I noticed that RAG seemed to be at the heart of how they are trying to make foundational LLMs more trustworthy and safe for enterprise customers.

Here is a YouTube tutorial on it:

While at Meta, Contextual A.I. CEO Kiela led research into a technique called retrieval augmented generation (RAG).

RAG basically augments LLMs with external sources, like files and webpages, to improve their performance.

DeepMind: https://arxiv.org/pdf/2112.04426.pdf

HuggingFace: https://huggingface.co/blog/ray-rag

Feb 2022 - DeepMind’s Retro is a retrieval-enhanced autoregressive language model. It uses a chunked cross-attention module to incorporate the retrieved text, with time complexity linear in the amount of retrieved data.

In 2023, Contextual A.I. claims its models are built to be more customizable, scalable and trustworthy, while keeping your data safe.

This context awareness could become important as our enterprise data integrates with LLMs. RAG first searches the external sources for data that might be relevant to the prompt. Then it packages those results with the original prompt and feeds the combined text to an LLM, generating a “context-aware” response.
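To make the retrieve-then-generate flow concrete, here is a minimal sketch in Python. It is not how Meta, Contextual A.I. or HuggingFace implement RAG: the retriever is a toy word-overlap (cosine) scorer standing in for a real dense retriever, and the function names (`retrieve`, `build_prompt`, `rag_answer`) and the `llm` callable are hypothetical placeholders for whatever model you would actually call.

```python
import math
import re
from collections import Counter


def tokenize(text):
    # Lowercase and split into alphanumeric word tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    # Cosine similarity between two bag-of-words Counters.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, sources, k=2):
    # Step 1: look for the k source passages most relevant to the query.
    # (Toy scorer; a real system would likely use learned embeddings.)
    q = Counter(tokenize(query))
    ranked = sorted(
        sources,
        key=lambda s: cosine(q, Counter(tokenize(s))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, passages):
    # Step 2: package the retrieved passages with the original prompt.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


def rag_answer(query, sources, llm, k=2):
    # Step 3: feed the combined prompt to the LLM (any callable str -> str).
    passages = retrieve(query, sources, k)
    return llm(build_prompt(query, passages))
```

You could try it with a stand-in model, for example `rag_answer("What is the refund policy?", docs, llm=lambda prompt: prompt)`, which simply echoes the packaged prompt so you can inspect what the LLM would receive.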

Read the announcement of RAG on HuggingFace back in 2020! See Tweet.

Where did Retrieval Augmented Generation (RAG) architecture come from?
