Hey Everyone,

A paper from Meta titled "Toolformer: Language Models Can Teach Themselves to Use Tools" has received a lot of attention over the last few days, and there's a reason it has gotten some of us a bit excited.

The Toolformer method builds on in-context learning to generate its own training data. Given a few manually written examples that show how to use a specific API, the LM annotates a large language modeling dataset with candidate API calls, keeps only the calls whose results actually help it predict the text that follows, and is then finetuned on the annotated data.
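To make the filtering step concrete, here is a minimal sketch, assuming we already have the log-probabilities the LM assigns to the tokens that follow a candidate call. In the paper, a call is kept when the weighted loss with the call and its result is lower, by at least a threshold, than the better of two baselines: no call at all, or the call without its result. The function names (`linearize`, `decay_weights`, `weighted_loss`, `keep_call`), the exact decay weights, the default threshold, and the log-probability numbers are all illustrative assumptions, not the authors' code.

```python
from typing import List, Optional, Sequence


def linearize(api_name: str, api_input: str, result: Optional[str] = None) -> str:
    """Render an API call as plain text in the <API> ... </API> style used in the paper."""
    if result is None:
        return f"<API> {api_name}({api_input}) </API>"
    return f"<API> {api_name}({api_input}) → {result} </API>"


def decay_weights(n: int) -> List[float]:
    """Weights for the n tokens after the call; tokens closer to the call count more.

    Assumption: w_t is proportional to max(0, 1 - 0.2 * t), normalized to sum to 1.
    """
    raw = [max(0.0, 1.0 - 0.2 * t) for t in range(n)]
    total = sum(raw)
    return [w / total for w in raw]


def weighted_loss(token_logprobs: Sequence[float]) -> float:
    """Weighted cross-entropy over the tokens that follow the candidate call."""
    weights = decay_weights(len(token_logprobs))
    return -sum(w * lp for w, lp in zip(weights, token_logprobs))


def keep_call(
    logp_with_result: Sequence[float],
    logp_without_call: Sequence[float],
    logp_call_without_result: Sequence[float],
    tau: float = 1.0,  # hypothetical threshold value
) -> bool:
    """Keep the call only if call + result lowers the loss by at least tau."""
    loss_plus = weighted_loss(logp_with_result)
    loss_minus = min(
        weighted_loss(logp_without_call),
        weighted_loss(logp_call_without_result),
    )
    return loss_minus - loss_plus >= tau


if __name__ == "__main__":
    # Made-up log-probabilities standing in for real LM outputs.
    print(linearize("Calculator", "400 / 1400", "0.29"))
    print(keep_call([-0.1, -0.2, -0.3], [-2.0, -1.5, -1.0], [-1.8, -1.4, -1.0]))
```

The key design point is the comparison against *both* baselines: a call only survives if the returned result, not merely the presence of a call, is what makes the next tokens easier to predict.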

There were several interesting tweets about it.

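Toolformer relies on self-supervised text annotations, which makes it more scalable than approaches built on curated, task-specific data such as OpenAI's earlier GSM8K work (GSM8K is a dataset of grade-school math word problems).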

I'm keeping a close eye on papers like this one, which explore how generative A.I. tools can teach themselves to call on other tools.

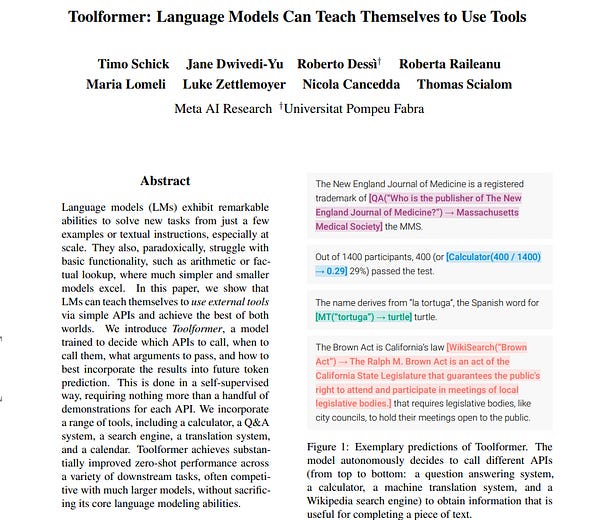

Language models (LMs) exhibit remarkable abilities to solve new tasks from just a few examples or textual instructions, especially at scale. They also, paradoxically, struggle with basic functionality, such as arithmetic or factual lookup, where much simpler and smaller models excel.

The paper introduces Toolformer, a model trained to decide which APIs to call, when to call them, what arguments to pass, and how to best incorporate the results into future token prediction. This is done in a self-supervised way, requiring nothing more than a handful of demonstrations for each API. They incorporate a range of tools, including a calculator, a Q&A system, two different search engines, a translation system, and a calendar. Toolformer achieves substantially improved zero-shot performance across a variety of downstream tasks, often competitive with much larger models, without sacrificing its core language modeling abilities.
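As a rough illustration of "incorporating the results", here is a minimal sketch, assuming text that already contains unanswered calls written in the bracket notation from the paper's examples, such as `[Calculator(400 / 1400)]`. In the paper, decoding pauses when the model emits the `→` token, the API is called, and its response is spliced in before generation resumes; this sketch simplifies that to a single post-processing pass. The tool implementations, the `TOOLS` registry, and `fill_api_calls` are hypothetical toy stand-ins.

```python
import operator
import re
from datetime import date
from typing import Callable, Dict

# Toy tools; the real Toolformer tools (QA system, search, translation) are far richer.
_OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(expr: str) -> str:
    """Evaluate a single binary operation such as '400 / 1400', rounded to 2 decimals."""
    left, op, right = expr.split()
    return f"{_OPS[op](float(left), float(right)):.2f}"


def calendar(_: str) -> str:
    """Return the current date; the argument is ignored."""
    return date.today().strftime("Today is %A, %B %d, %Y.")


TOOLS: Dict[str, Callable[[str], str]] = {"Calculator": calculator, "Calendar": calendar}

# Matches an unanswered call such as "[Calculator(400 / 1400)]".
_CALL = re.compile(r"\[(\w+)\(([^)]*)\)\]")


def fill_api_calls(text: str, tools: Dict[str, Callable[[str], str]]) -> str:
    """Execute each known call and splice its result back into the text."""

    def run(match: "re.Match[str]") -> str:
        name, arg = match.group(1), match.group(2)
        tool = tools.get(name)
        if tool is None:
            return match.group(0)  # unknown tool: leave the call untouched
        return f"[{name}({arg}) → {tool(arg)}]"

    return _CALL.sub(run, text)


if __name__ == "__main__":
    draft = "Out of 1400 participants, 400 (or [Calculator(400 / 1400)] 29%) passed the test."
    print(fill_api_calls(draft, TOOLS))
```

Keeping tools behind a name-to-function registry mirrors the idea that the model only has to emit a tool name and an argument string; everything else is handled outside the LM.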

The model learned to use tools such as a calculator, a calendar, and Wikipedia search across many downstream tasks.

The idea of LLMs calling on different tools for different tasks is interesting in itself. What stands out with Toolformer is that, as the researchers write, a language model can control a variety of tools and decide for itself which tool to use, when, and how.

Synced, The Decoder, and many other A.I. news publications I respect wrote about this. The core idea is easy to understand; the bigger question is what happens if the approach scales. With Toolformer, an LLM can extend its abilities by calling the APIs of external programs.

The topic also trended on the r/MachineLearning subreddit.

According to Wang, however, Toolformer has so far only tackled problems that can be solved with "stateless" APIs, such as a calculator, Wikipedia search, or dictionary lookups. It does not support transactions that require explicit dialog state tracking, such as hotel reservations or e-commerce. In these cases, the LLM's "blurry" state representation could be problematic, he said.

While more sophisticated API engineering is required to allow the LLM brain to make better use of its newly acquired helping hands, LLMs enabled by external tools will undoubtedly revolutionize many real-world application domains.
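To illustrate the distinction Wang draws, here is a minimal sketch contrasting a stateless lookup with a stateful booking flow. It assumes a simple slot-filling view of dialog state tracking, which is one common framing and not something the paper or Wang specifies; `dictionary_lookup`, `HotelBookingState`, and the slot names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


def dictionary_lookup(word: str) -> str:
    """Stateless: the answer depends only on the argument, so one inline call suffices."""
    toy_dictionary = {"tool": "a device used to carry out a particular function"}
    return toy_dictionary.get(word, "not found")


@dataclass
class HotelBookingState:
    """Stateful: a booking needs slots gathered across several dialog turns."""

    slots: Dict[str, Optional[str]] = field(
        default_factory=lambda: {"city": None, "check_in": None, "nights": None}
    )

    def update(self, slot: str, value: str) -> None:
        if slot not in self.slots:
            raise KeyError(f"unknown slot: {slot}")
        self.slots[slot] = value

    def missing(self) -> List[str]:
        return [name for name, value in self.slots.items() if value is None]

    def book(self) -> str:
        # The booking call is only valid once every slot has been tracked explicitly.
        missing = self.missing()
        if missing:
            return "cannot book yet, missing: " + ", ".join(missing)
        return (
            f"booked {self.slots['nights']} night(s) in {self.slots['city']} "
            f"from {self.slots['check_in']}"
        )


if __name__ == "__main__":
    print(dictionary_lookup("tool"))
    state = HotelBookingState()
    state.update("city", "Barcelona")  # turn 1
    print(state.book())
    state.update("check_in", "2023-03-01")  # turn 2
    state.update("nights", "2")  # turn 3
    print(state.book())
```

A single inline call like Toolformer's works for the lookup, but the booking only succeeds if something keeps an explicit record of what has been gathered so far, which is the part an LLM's implicit state may handle poorly.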

The paper's authors are from Meta AI Research and Universitat Pompeu Fabra.

The team summarizes Toolformer’s desiderata as follows:

The use of tools should be learned in a self-supervised way without requiring large amounts of human annotations. This is important not only because of the costs associated with such annotations, but also because what humans find useful may be different from what a model finds useful.

The LM should not lose any of its generality and should be able to decide for itself when and how to use which tool. In contrast to existing approaches, this enables a much more comprehensive use of tools that is not tied to specific tasks.


Subscribe to Artificial Intelligence Learning 🤖🧠🦾 to keep reading this post and get 7 days of free access to the full post archives.