What is xAI's Grok 4 Fast?

An update from Elon Musk's corner of the Universe of AI.

Hey Everyone,

It’s the weekend and xAI has surprised us with something before Grok 5. So what is Grok 4 Fast?

Grok 4 Fast Specs TL;DR

2M context window - 47x cheaper than Grok 4

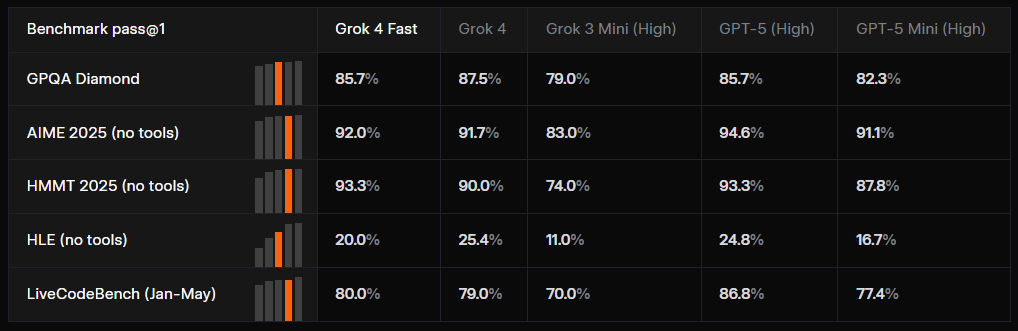

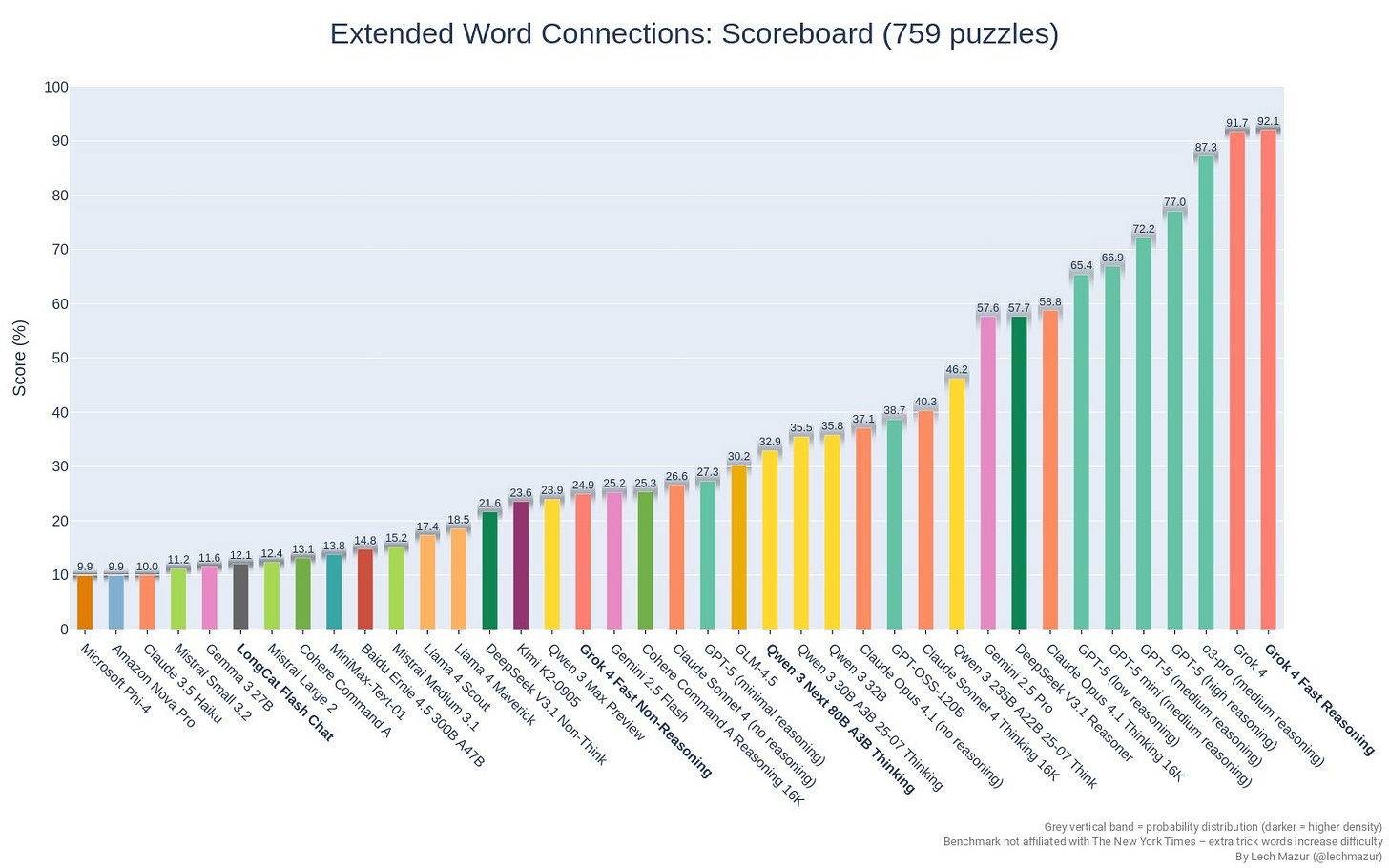

Beats GPT-5 mini (high) on multiple benchmarks

40% fewer thinking tokens on average - $0.20 input, $0.50 output per million tokens, for both reasoning and non-reasoning modes

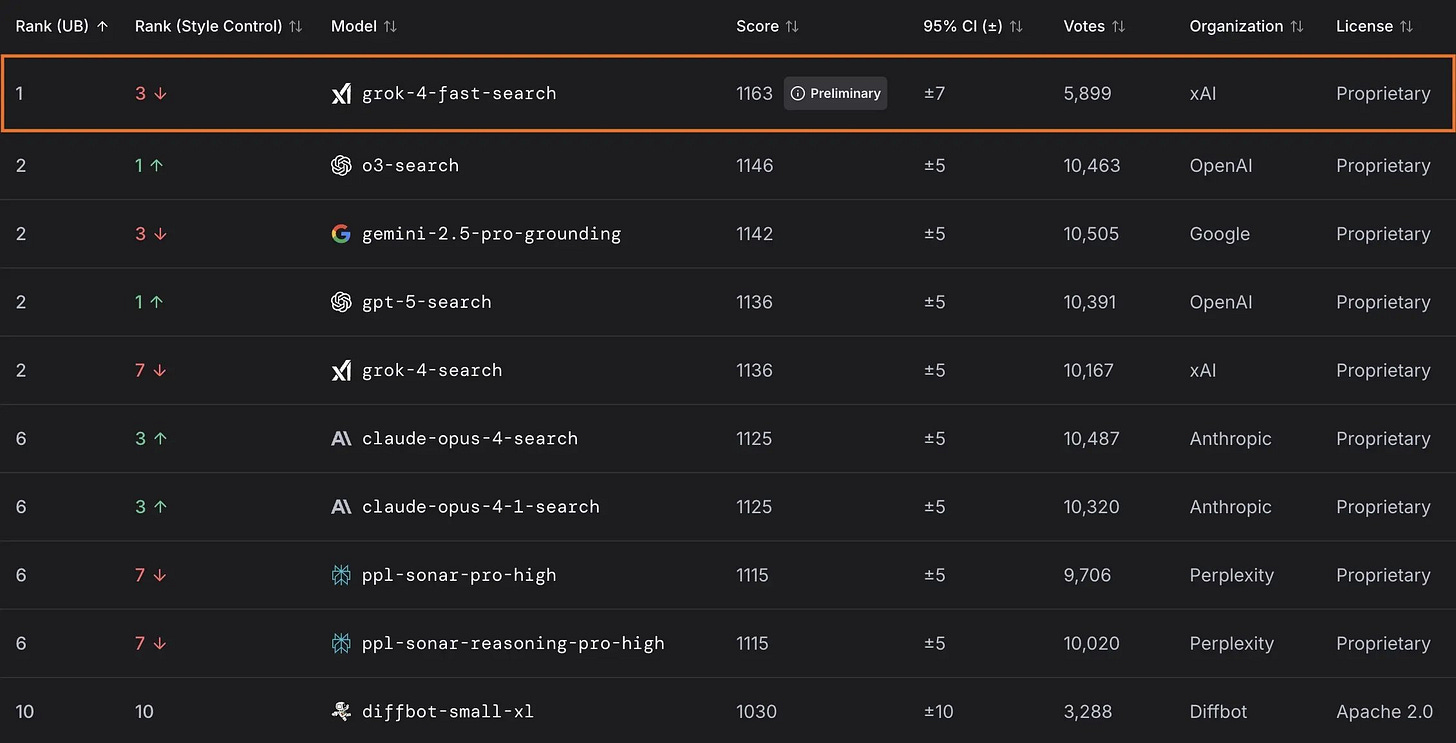

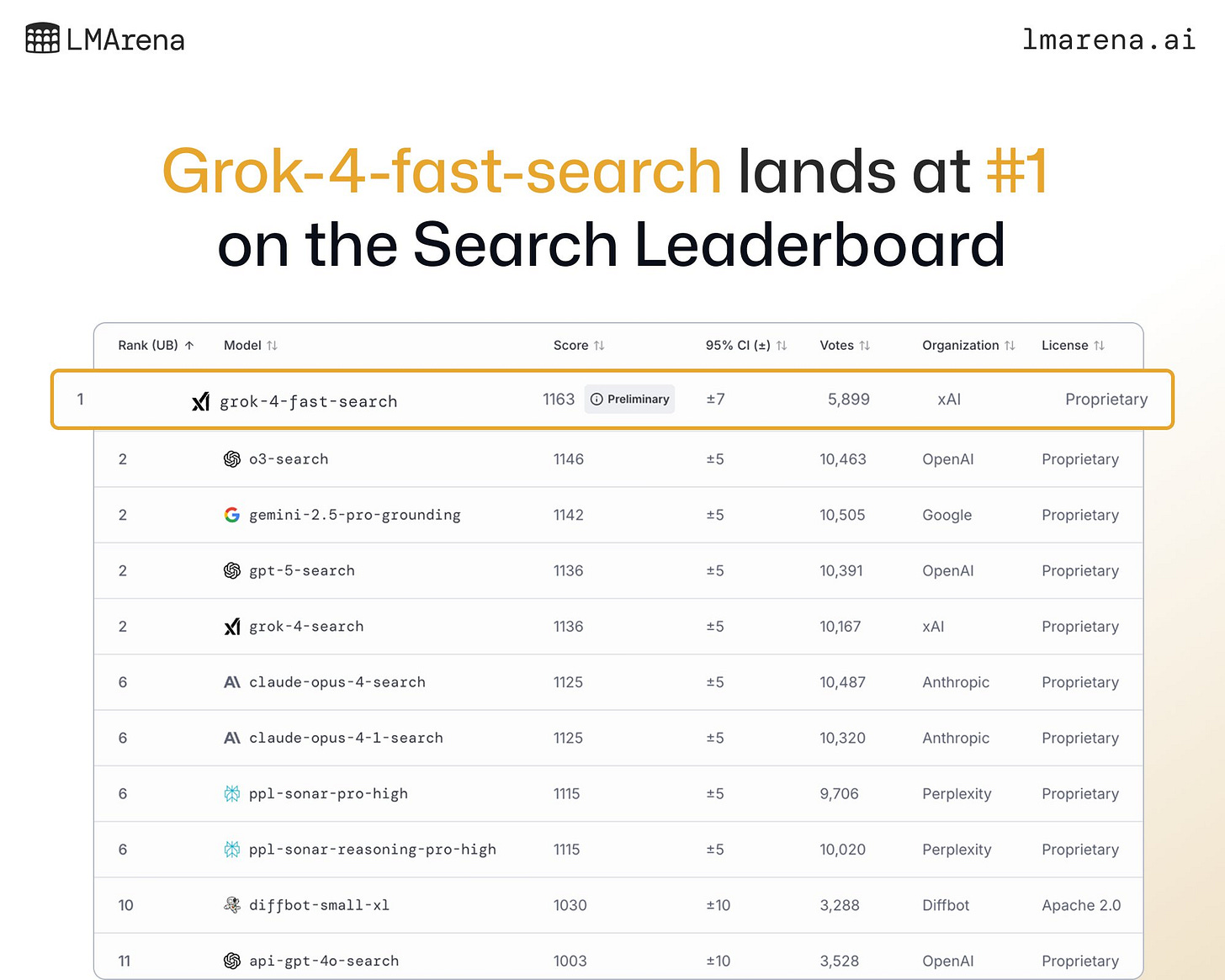

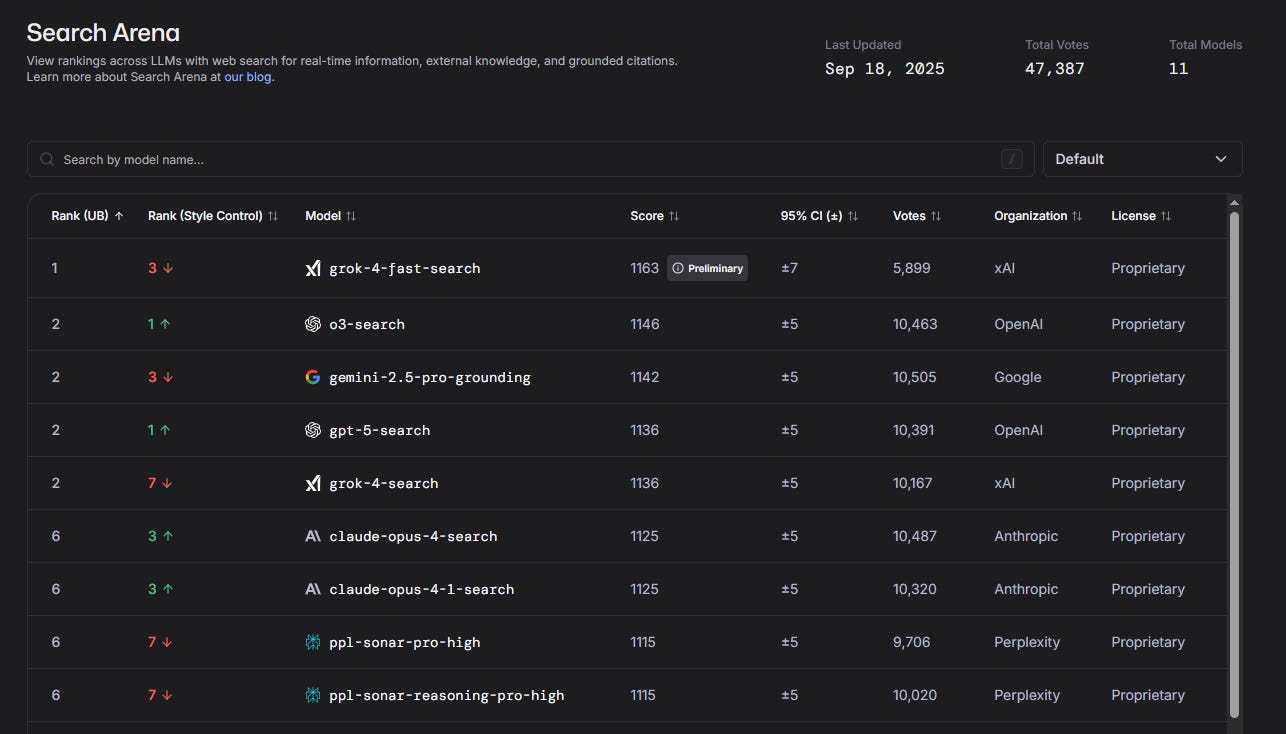

No. 1 on LMArena's Search Arena, ahead of OpenAI's o3-search - beats Grok 4 on AIME 2025 and HMMT 2025.

Sounds kind of impressive?

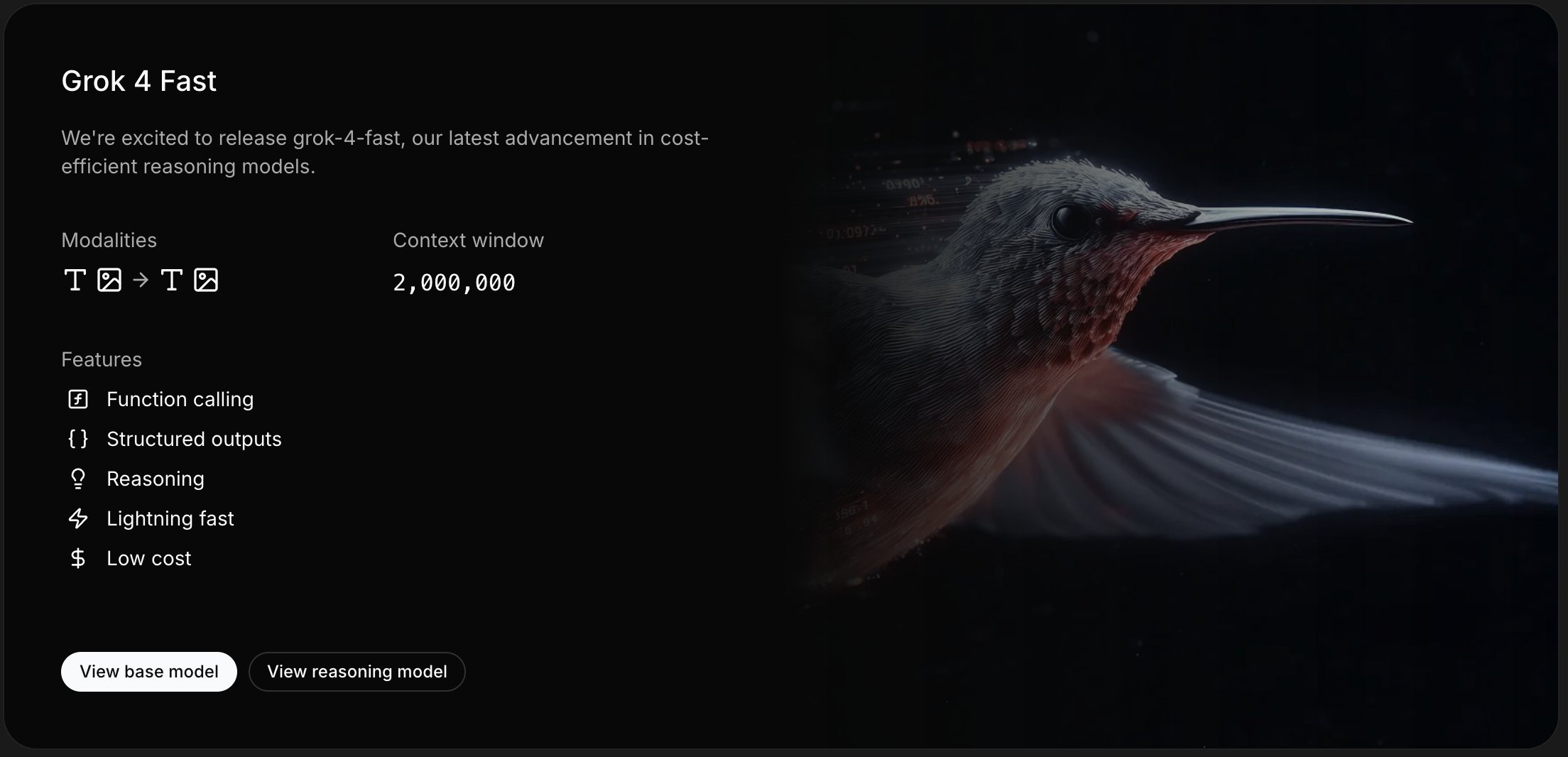

Grok 4 Fast is a cost-efficient AI model developed by xAI, designed to deliver high-speed responses while maintaining near-equivalent accuracy to its predecessor, Grok 4.

It’s optimized for both enterprise and consumer use, combining reasoning and non-reasoning capabilities in a single framework.

Features of Grok 4 Fast Include:

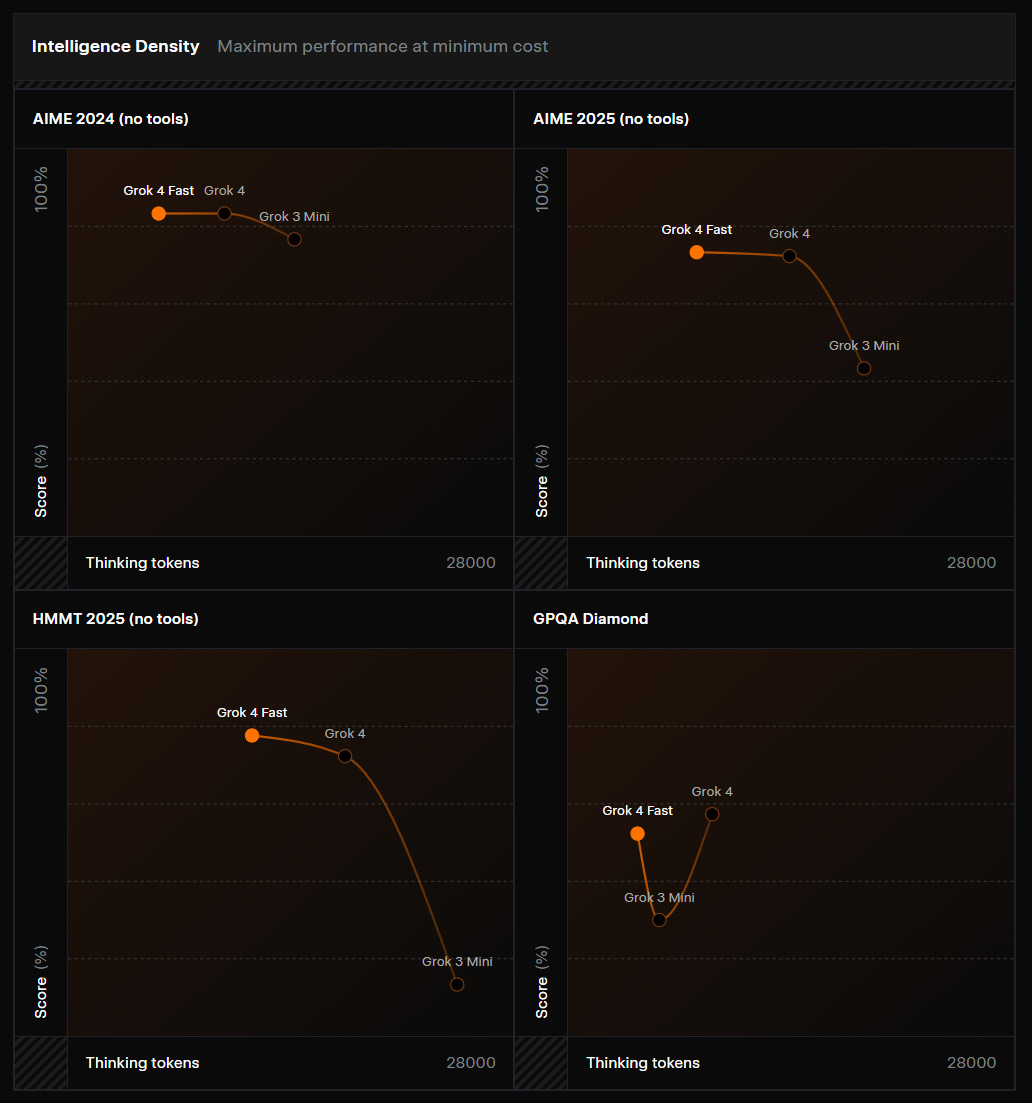

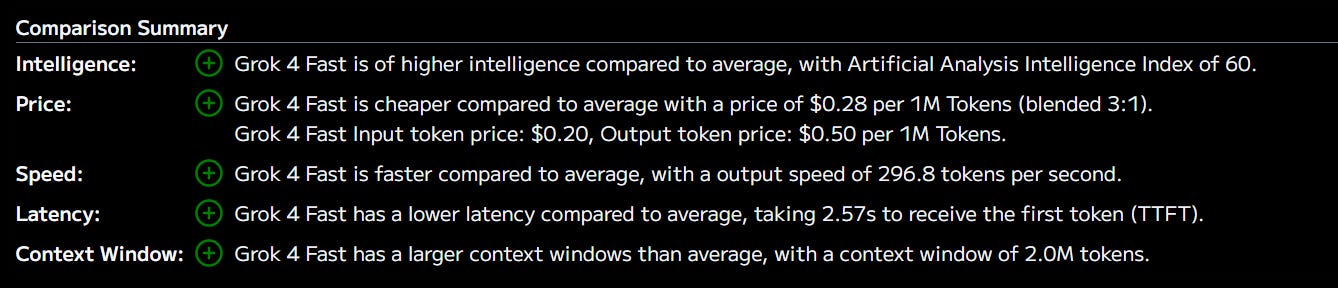

Speed and Efficiency: Uses 40% fewer thinking tokens than Grok 4, achieving up to 98% cost reduction while matching performance on benchmarks like GPQA Diamond (85.7%), AIME 2025 (92%), and HMMT 2025 (93.3%). It offers rapid responses, reportedly up to 10x faster than Grok 4, with low latency (2.55s time to first token) and high output speed (342.3 tokens per second).

Large Context Window: Supports a 2 million token context window, enabling it to handle large and complex inputs effectively.

Multimodal Capabilities: Includes improved coding reliability, image understanding, and voice features, making it suitable for tasks like quick code suggestions, real-time Q&A, and drafting.

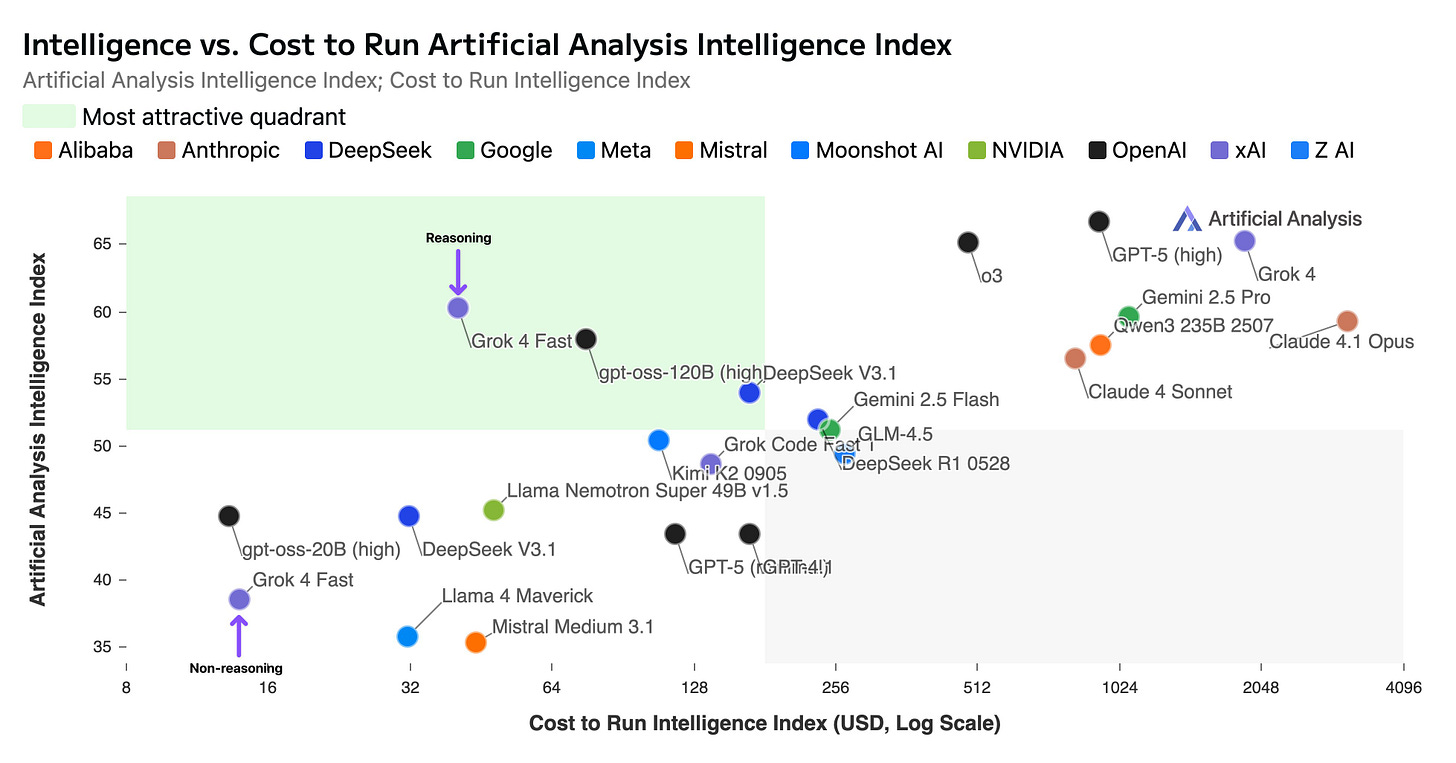

Cost-Effectiveness: Priced at $0.20 per million input tokens and $0.50 per million output tokens, it’s significantly cheaper than many competitors, as verified by Artificial Analysis.

Availability: Accessible on grok.com, xAI’s iOS and Android apps, OpenRouter, Vercel AI Gateway, and the xAI API. It’s offered in early access beta, with free access on some platforms for a limited time, and is available to SuperGrok, X Premium, and Premium+ users.
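To make the pricing concrete, here is a minimal sketch of the arithmetic, assuming the list prices quoted above ($0.20 per million input tokens, $0.50 per million output tokens) and nothing else. The function names and the example workload are my own illustrations, not anything from xAI, and real bills may differ (cached tokens, tool calls and so on are not modeled).

```python
# Prices quoted in the article, in USD per one million tokens.
INPUT_USD_PER_MTOK = 0.20
OUTPUT_USD_PER_MTOK = 0.50


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request at the quoted list prices."""
    total = input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK
    return total / 1_000_000


def reduction_from_multiple(times_cheaper: float) -> float:
    """Convert an 'N times cheaper' claim into a fractional cost reduction."""
    return 1.0 - 1.0 / times_cheaper


if __name__ == "__main__":
    # Hypothetical workload: a 200k-token prompt with a 5k-token answer.
    print(f"${estimate_cost_usd(200_000, 5_000):.4f}")
    # The "47x cheaper" headline expressed as a percentage reduction.
    print(f"{reduction_from_multiple(47):.1%}")
```

On those assumptions, a 200k-token prompt with a 5k-token answer costs a little over four cents, and "47x cheaper" works out to just under a 98% reduction, which lines up with the "up to 98%" figure.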

Sleuths on Reddit and at Testing Catalog noticed this on September 14th, nearly a week ago.

While turnover appears high at xAI, its AI infrastructure does seem to let the company move quickly. (Elon Musk has been focusing on xAI since he left his position on the Trump administration's DOGE team.)

What are people on Reddit saying?

xAI claims that Grok 4 Fast offers a state-of-the-art (SOTA) price-to-intelligence ratio, ideal for its “Deep Search” function.

It’s a bit like some of the efficiency gains we are seeing out of Alibaba's Qwen:

“Grok 4 Fast achieves comparable performance to Grok 4 on benchmarks while using 40% fewer thinking tokens on average.”

This is what Grok itself said about Grok 4 Fast:

“Grok 4 Fast prioritizes speed for straightforward queries while retaining strong reasoning for tasks like math, logical analysis, and coding, making it ideal for developers, analysts, and knowledge workers needing fast, reliable outputs. However, it may sacrifice depth for complex reasoning compared to larger models.”

A lot of LLM labs are going after science, white collar workers and knowledge workers as a whole.

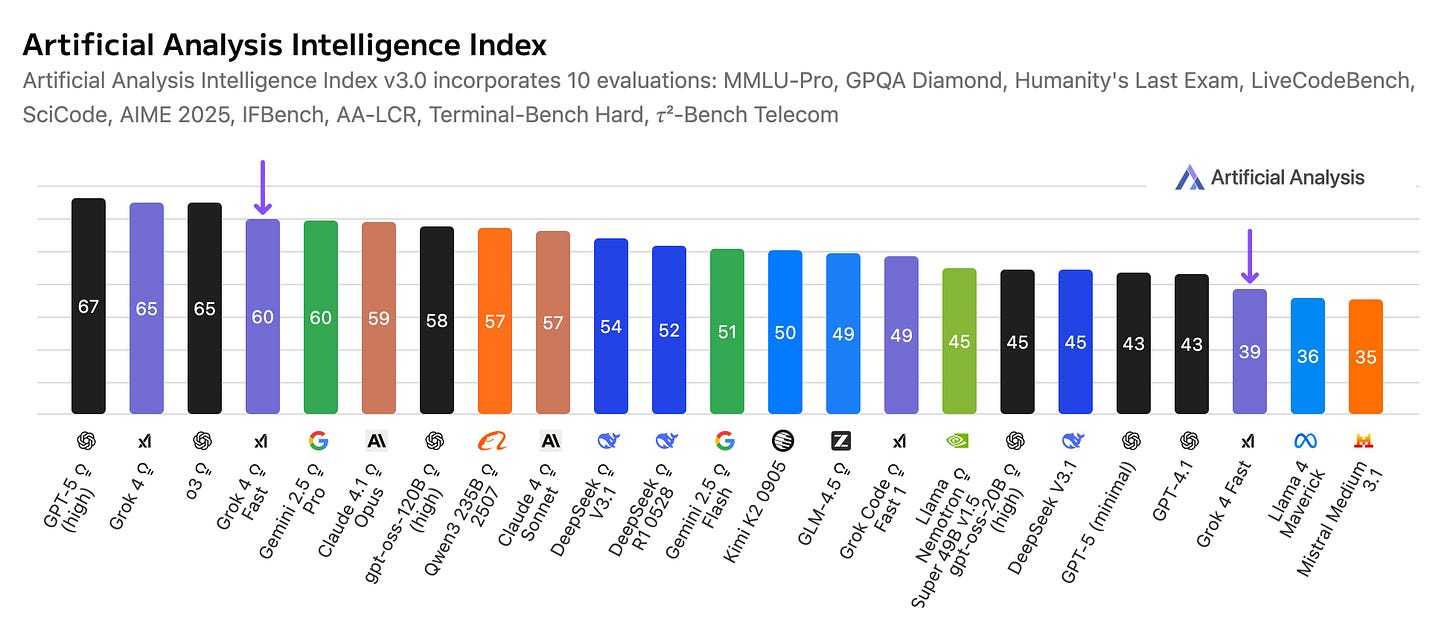

How Good is it?

Its combination of price, efficiency and speed is appealing:

Read what Artificial Analysis had to say.
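For a rough feel of what the speed numbers mean in practice, here is a back-of-the-envelope sketch using the figures quoted earlier (2.55s time to first token, 342.3 output tokens per second). It assumes a constant streaming rate after the first token, which is a simplification of mine; the function name is hypothetical and real-world latency will vary with load and with how much the model "thinks" first.

```python
# Speed figures quoted in the article.
TIME_TO_FIRST_TOKEN_S = 2.55   # seconds before the first output token arrives
OUTPUT_TOKENS_PER_S = 342.3    # streaming speed after the first token


def estimate_response_seconds(output_tokens: int) -> float:
    """Estimate wall-clock time for a response, assuming a constant output rate."""
    return TIME_TO_FIRST_TOKEN_S + output_tokens / OUTPUT_TOKENS_PER_S


if __name__ == "__main__":
    # Hypothetical answer lengths, in output tokens.
    for tokens in (100, 1_000, 5_000):
        print(f"{tokens:>5} tokens: about {estimate_response_seconds(tokens):.1f}s")
```

Under these assumptions a 1,000-token answer lands in roughly five and a half seconds, with about half of that spent waiting for the first token.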

What else to know?

Grok 4 Fast is in early beta but has some uses that could boost both xAI’s API and consumer adoption in terms of quick-search functionality. Personally, I’m a fan of Grok’s Voice Mode; that alone makes the app worth downloading.

Benchmark Performance: Excels in specialized evaluations, scoring 85.7% on GPQA Diamond for graduate-level science questions, 92% on AIME 2025 math competition, and 93.3% on HMMT 2025 for advanced math and team problems, demonstrating robust problem-solving abilities.

Token Efficiency: Uses about 40% fewer "thinking" tokens on average than Grok 4, allowing for faster inference without proportional increases in hardware demands, ideal for scalable deployments.

Context Handling: Its 2 million token window supports processing entire codebases, lengthy documents, or extended conversations in one go, minimizing the need for chunking or summarization.

Multimodal Integration: Seamlessly handles text, images, and voice inputs; for example, it can analyze uploaded diagrams for engineering queries or transcribe and respond to spoken prompts in real-time.

Coding Enhancements: Provides reliable code generation, debugging, and optimization suggestions across languages like Python, JavaScript, and Rust, with a focus on concise, executable outputs for rapid prototyping.

Voice Mode: Exclusive to Grok iOS and Android apps, enabling hands-free interactions with natural-sounding speech synthesis, perfect for on-the-go users like drivers or multitaskers.

Enterprise Applications: Tailored for business workflows, including automated report generation, data analysis, and customer support bots, with API integrations for tools like Slack, Jira, and CRM systems.

Consumer-Friendly Features: Delivers quick, witty responses for casual queries, such as recipe adaptations or travel planning, while maintaining Grok's signature humor and helpfulness.

Sustainability Focus: Lower token usage contributes to reduced energy consumption per query compared to larger models, aligning with xAI's goals for efficient AI development.

Future Roadmap: As an early beta, it paves the way for upcoming enhancements like real-time collaboration tools and deeper integrations with xAI's ecosystem, with community feedback driving iterations.

Grok 4 Fast looks like a decent research option.

It appears to push the LLM space further along, much as Google and Qwen have done in 2025, which offsets how underwhelming OpenAI's GPT-5 has been. It isn’t specialized in AI coding the way Anthropic’s models are, and Grok 4 Fast feels more like a B2C model than a B2B one.

Grok app downloads have improved in 2025, but the numbers are nothing incredible, at around 10 million total app downloads as of August. Relative to the cash burn and LLM capabilities, xAI still has some work to do to be a serious player.

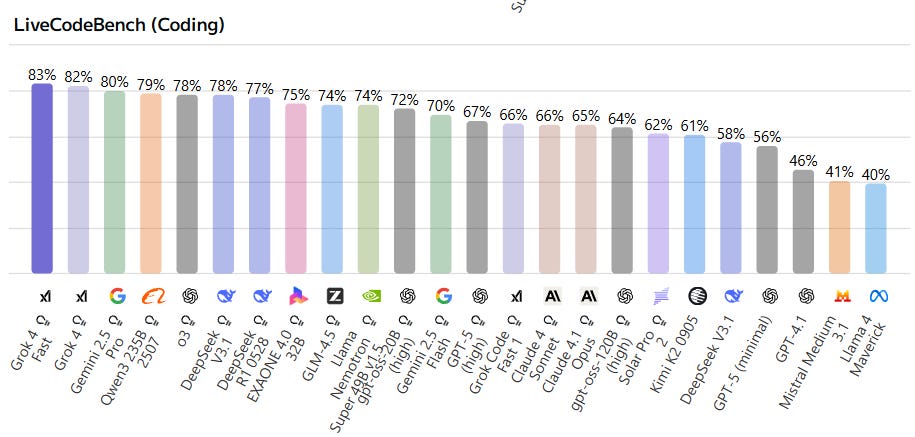

Grok is getting better at coding

How many Users does Grok have?

As of August, Grok had in the area of 30 million MAUs, based on the latest data from Semrush.

This is not great considering xAI's huge AI infrastructure efforts. Marketing, branding and product marketing will need to improve drastically.

Following the release of Grok 4 in July 2025, Grok added 9 million monthly users.

The release of Grok 5 later in 2025 could produce an even bigger uptick.

While I don’t consider Grok 4 Fast a major upgrade, it’s a very useful model and makes Grok much more appealing. I mostly use it for Deep Search myself, in place of Gemini, Claude and ChatGPT for the most part, and sometimes even in place of Perplexity and AI Overviews. But that’s just me.

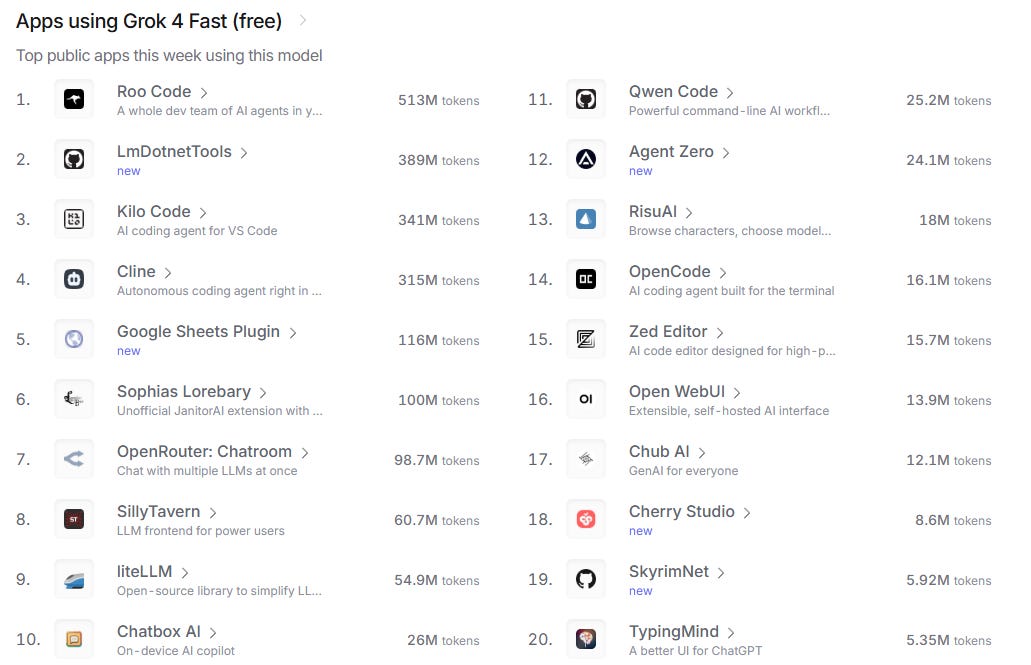

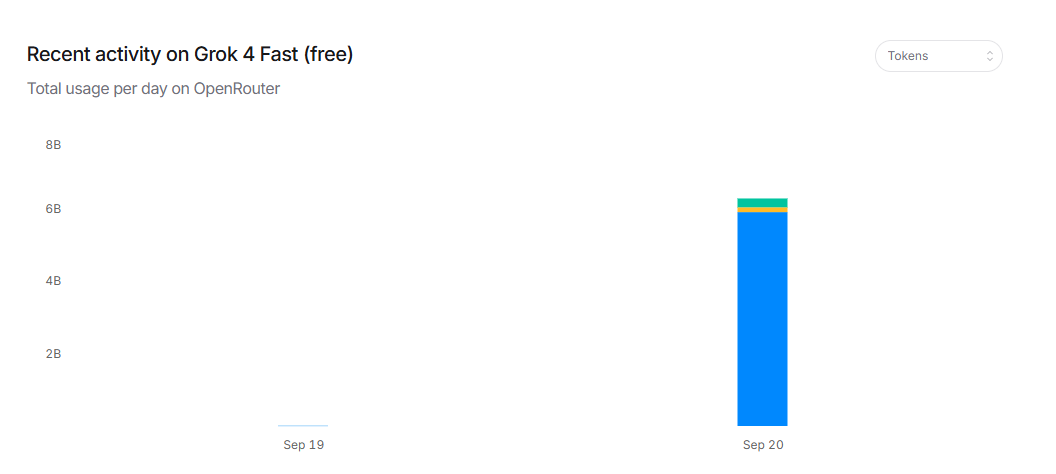

The API should gain more market share with Grok 4 Fast though, and more developers will be using it now due to its cost-to-performance ratio:

September 20th, 2025 was a nice debut:

Roughly the same performance as Gemini 2.5 Pro but 25x cheaper.

The Grok app has also been downloaded over 50 million times on the Google Play Store.

Is it the Fastest Reasoning Model yet?

Is this a breakthrough model for AI Search?

It’s fun to think about.

But it’s Blazing Fast at Coding Too

xAI has really improved in AI coding in 2025 relative to others, if we are to believe its benchmark performance. (Claude is generally considered the leader.)

Grok's integration with Telegram in April 2025 also played a role in its increasing popularity and user activity.

Grok is growing fast in India, where Gemini and ChatGPT have seen incredible success in the last 18 months in terms of adoption.

Thanks for the insight Michael. I’ll be testing out the API. I’ve been working on a voice agent and heard great things about grok voice so this gives me the push to test it out.