Why did Geoffrey Hinton Leave Google?

His warnings about A.I. risk ring true. They remind me of Stephen Hawking.

Hey Everyone,

Something is going a bit sideways in A.I. innovation. Geoffrey Hinton, who won the ‘Nobel Prize of computing’ for his trailblazing work on neural networks, is now free to speak about the risks of AI. He’s decided to leave Google, and he doesn’t sound very happy about what’s going on.

My earliest introduction to A.I. risk was Stephen Hawking. I considered him smart but perhaps exaggerating a little bit. I think I may have been wrong.

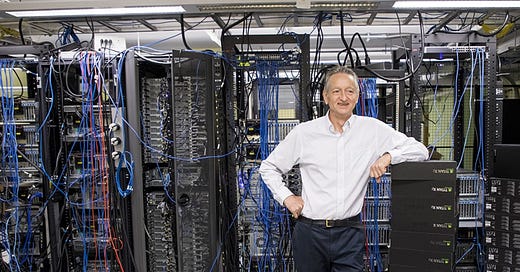

Geoffrey Hinton, a VP and engineering fellow at Google and a pioneer of deep learning who developed some of the most important techniques at the heart of modern AI, is leaving the company after 10 years, the New York Times reported today.

According to the Times, Hinton says he has new fears about the technology he helped usher in and wants to speak openly about them, and that a part of him now regrets his life’s work.

Back in 2012, Dr. Hinton and two of his graduate students at the University of Toronto created technology that became the intellectual foundation for the A.I. systems that the tech industry’s biggest companies believe are key to their future, said the NYT.

I was a bit shocked to hear this story:

“I console myself with the normal excuse: If I hadn’t done it, somebody else would have,” said Hinton, who had been employed by Google for more than a decade. “It is hard to see how you can prevent the bad actors from using it for bad things.”

I wonder if Jack Shanahan feels the same way about his work with Google on Project Maven. In retrospect, that may have been a turning point in how China approached A.I. in warfare in its bid to copy and surpass the West. But now we are at a different stage in how corporate leaders collaborate with national security interests. As private and government interests intersect, the U.S. has become a bit more like China.

Dr. Hinton said he has quit his job at Google, where he has worked for more than a decade and become one of the most respected voices in the field, so he can freely speak out about the risks of A.I. I’m all ears.

The life-long academic joined Google after it acquired a company started by Hinton and two of his students, one of whom went on to become chief scientist at OpenAI. Clearly whatever OpenAI becomes will be part of Hinton’s legacy, in a manner of speaking.

The 75-year-old computer scientist has divided his time between the University of Toronto and Google since 2013, when the tech giant acquired Hinton’s AI startup DNNresearch.

It pains me to see a senior A.I. pioneer have to talk like this:

This is NOT Good.

The spread of misinformation is only Hinton’s immediate concern. On a longer timeline he’s worried that AI will eliminate rote jobs, and possibly humanity itself as AI begins to write and run its own code. This interests me because it’s what I’ve been writing about over the last few days.

The implications worry me too, and I’m not trying to be a grifter or do fear-mongering. I typically stay as far away from the maniac doomers as I can. I consider reading LessWrong articles poor for my mental health, and a bit like reading conspiracy forums. I don’t look up to doomers, no matter how “rational” their sheep’s clothing.

But as for the top scientists, we have to give them some benefit of the doubt. Maybe the next quote of his is the kicker:

“The idea that this stuff could actually get smarter than people — a few people believed that,” said Hinton to the NYT. “But most people thought it was way off. And I thought it was way off. I thought it was 30 to 50 years or even longer away. Obviously, I no longer think that.” - Hinton, mid 2023
