Why Microsoft's Phi-4 going Open Source is Important

How will small language models evolve in 2025?

Hey Everyone,

While Microsoft’s contribution to Generative AI has faded badly as we head into 2025, especially relative to Google, Anthropic, OpenAI and even Meta, phi-4 is still somewhat interesting.

Microsoft’s Phi models have been among the best small language models (SLMs) in the world.

phi-4 is a state-of-the-art open model built upon a blend of synthetic datasets, data from filtered public domain websites, and acquired academic books and Q&A datasets. The goal of this approach was to ensure that small capable models were trained with data focused on high quality and advanced reasoning. phi-4 underwent a rigorous enhancement and alignment process, incorporating both supervised fine-tuning and direct preference optimization to ensure precise instruction adherence and robust safety measures. - Hugging Face

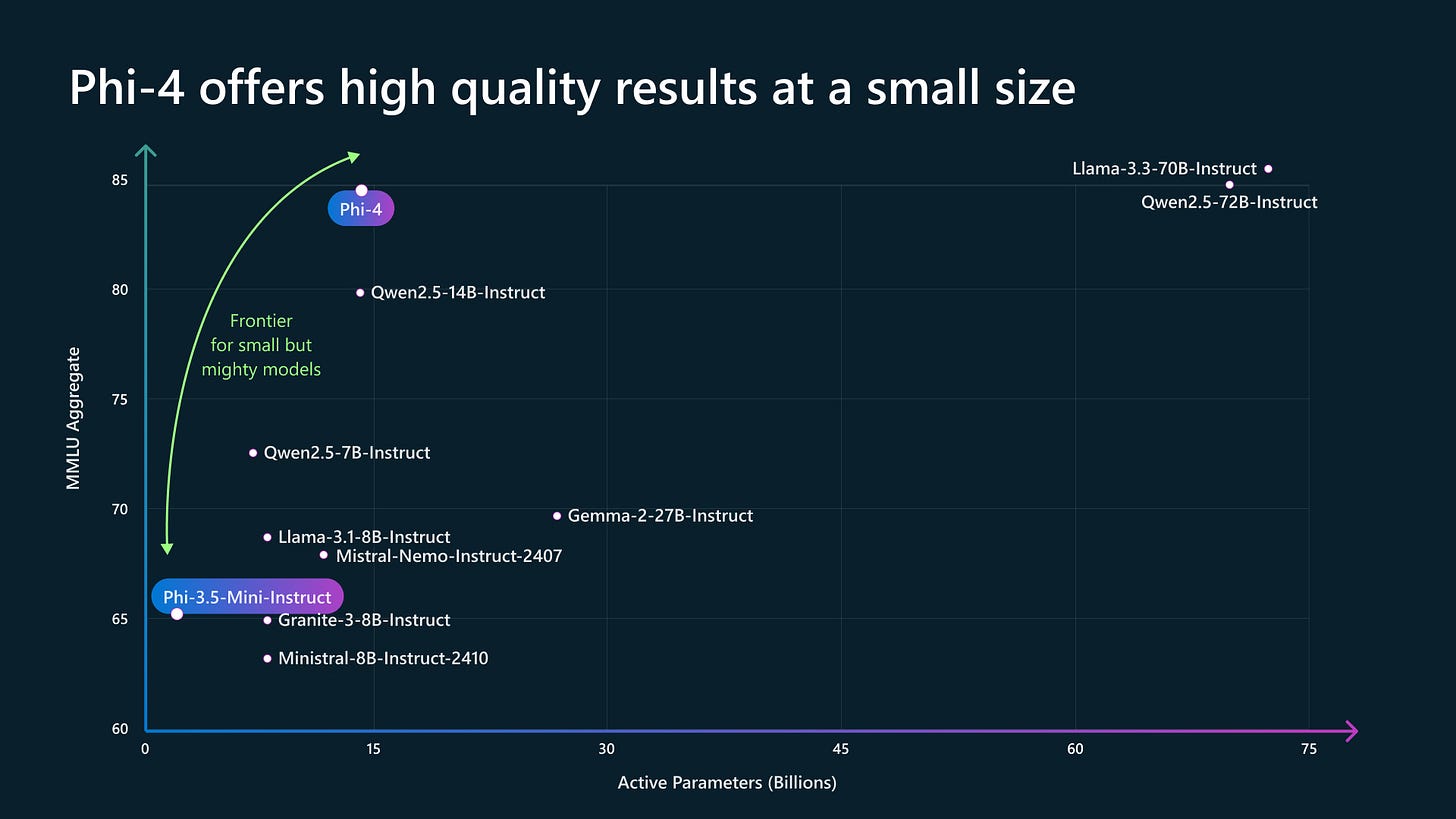

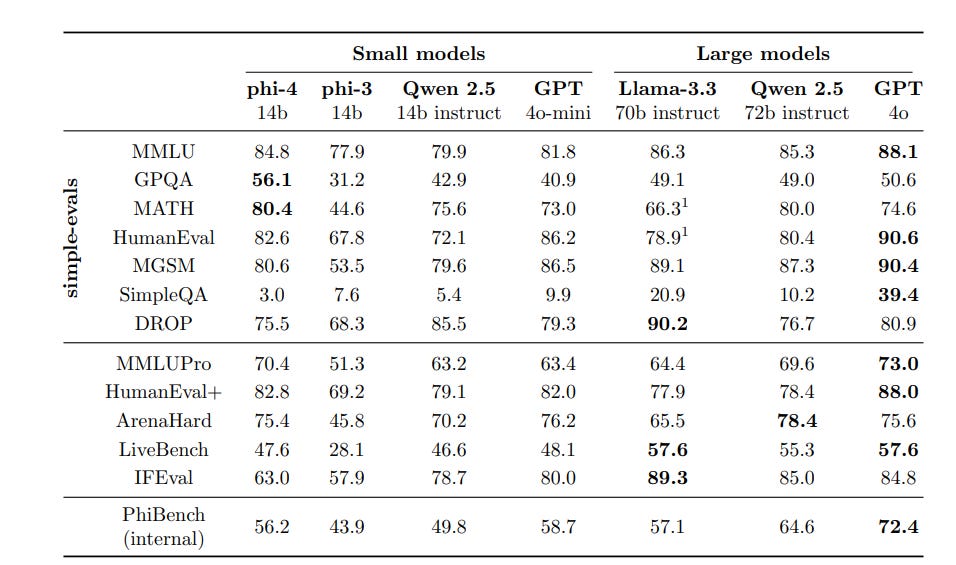

So by finally making it free on Hugging Face (open-weight, not really open-source), Microsoft does help the global ecosystem. At roughly 14 billion parameters, Phi-4 is a tiny model, yet it outperforms Llama 3.3 70B (nearly five times bigger) and OpenAI’s GPT-4o Mini on several benchmarks. On math competition questions, Phi-4 outperformed Gemini 1.5 Pro and OpenAI’s GPT-4o.

While Microsoft Research is a capable research lab, Microsoft has struggled to make good AI products. Many of its customers feel pushed into using its Copilots even though they aren’t very good, and most end up using OpenAI’s products instead.

Phi-4 is said to excel at complex reasoning. The model can also be found on Lightning AI.

Phi-4 is a rare demonstration of just how well SLMs can perform.

For companies like Apple and OpenAI, small language models may matter a great deal as we anticipate a new era of AI-native devices. Benchmark performance is also surprisingly good.

Read Sebastian Raschka, PhD’s insights in this LinkedIn post.

The paper is dated December 12th, 2024.

It’s not clear to me how Microsoft is actually using Phi-4 in its products.

What it is for me, though, is a testament to synthetic data, especially if we are to believe Elon Musk and many other pundits that we already passed Peak Data in 2024. For Phi-4, Microsoft has not experimented with inference optimisation; the focus is mainly on synthetic data.

[…] phi-4 is that the training data consisted of 40% synthetic data. And the researchers observed that while synthetic data is generally beneficial, models trained exclusively on synthetic data performed poorly on knowledge-based benchmarks. - Sebastian Raschka

Meta is also working on more advanced techniques to reduce hallucinations. In a new paper, researchers at Meta AI propose “scalable memory layers,” which could be one of several possible solutions to the memory and hallucination problems of LLMs.

According to Meta’s researchers, scalable memory layers add more parameters to LLMs to increase their learning capacity without requiring additional compute resources.
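To make the idea a little more concrete, here is a minimal Python sketch of a product-key memory lookup, the kind of sparse key-value layer that memory-layer research builds on. This is not Meta’s code: the class name `ProductKeyMemory`, the sizes and the random initialization are all hypothetical, chosen only for illustration. What it shows is that the number of stored value vectors (the extra parameters) grows as `n_sub * n_sub`, while each lookup only scores `2 * n_sub` sub-keys and reads `k` values.

```python
import math
import random
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(scores: List[float], k: int) -> List[int]:
    # Indices of the k highest scores.
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


class ProductKeyMemory:
    """Hypothetical, simplified product-key memory (illustration only)."""

    def __init__(self, n_sub: int, dim: int, value_dim: int, k: int, seed: int = 0):
        assert dim % 2 == 0, "query is split into two halves"
        rng = random.Random(seed)
        self.half = dim // 2
        self.n_sub = n_sub
        self.k = k
        # Two small sets of sub-keys, one per query half.
        self.sub_keys_a = [[rng.gauss(0, 1) for _ in range(self.half)] for _ in range(n_sub)]
        self.sub_keys_b = [[rng.gauss(0, 1) for _ in range(self.half)] for _ in range(n_sub)]
        # n_sub * n_sub value slots: these are the "extra parameters".
        self.values = [[rng.gauss(0, 1) for _ in range(value_dim)] for _ in range(n_sub * n_sub)]
        self.last_scored = 0  # how many sub-keys the last lookup scored

    def lookup(self, query: Vector) -> Vector:
        qa, qb = query[: self.half], query[self.half :]
        scores_a = [dot(qa, key) for key in self.sub_keys_a]
        scores_b = [dot(qb, key) for key in self.sub_keys_b]
        self.last_scored = len(scores_a) + len(scores_b)
        # The global top-k over all n_sub * n_sub slots is guaranteed to lie
        # among the top-k of each half, so only k * k candidates are combined.
        best_a = top_k(scores_a, self.k)
        best_b = top_k(scores_b, self.k)
        candidates = [(scores_a[i] + scores_b[j], i * self.n_sub + j) for i in best_a for j in best_b]
        candidates.sort(reverse=True)
        chosen = candidates[: self.k]
        weights = softmax([score for score, _ in chosen])
        # Output is a weighted sum of just k value vectors.
        out = [0.0] * len(self.values[0])
        for w, (_, idx) in zip(weights, chosen):
            for d, v in enumerate(self.values[idx]):
                out[d] += w * v
        return out


mem = ProductKeyMemory(n_sub=32, dim=8, value_dim=4, k=4)
query = [0.5] * 8
out = mem.lookup(query)
print(len(mem.values), mem.last_scored, len(out))  # 1024 64 4
```

Doubling `n_sub` quadruples the memory slots but only doubles the sub-keys scored per query, which is roughly why layers like this can add capacity without a matching jump in compute.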

Read Seb’s Overview of Great AI Papers of 2024

It seems to me Microsoft was a bit late in releasing phi-4 under an MIT license, but the release should still help democratize SLM research and opportunities, especially in China. Phi-4’s predecessor, Phi-3.5, was also made available for free on Hugging Face, well, eventually! Microsoft is nowhere near Meta’s league, though, in its contributions to the open-weight LLM community.

Still, Phi-4 demonstrates that smaller, well-designed models can match or even beat much larger models. What will that mean for on-device applications? Phi-4 also supports ten Indian languages, as Microsoft is especially keen to cozy up to Indian developers and ML folk.

Although Phi-4 was actually revealed by Microsoft last month, its usage was initially restricted to Microsoft’s new Azure AI Foundry development platform. This was a bit peculiar. It’s not always clear how the work of Microsoft Research fits into Microsoft’s broader AI ecosystem and AI products.

Since its launch, Phi-4 has garnered attention for its capabilities and has been featured in various tutorials and demonstrations, showcasing its potential in real-world applications. For instance, a recent tutorial on DataCamp illustrates how to build a homework checker using Phi-4, highlighting its practical utility in educational contexts. It seems mostly like empty PR to me, but I still have big expectations for SLMs in 2025 overall.

Microsoft recently pledged $3 billion to India during Satya Nadella’s tour. Basically, Big Tech will be building datacenters in Asia and training a cheaper future AI workforce there, using terms like key investments in “Cloud and AI infrastructure and AI upskilling”. That of course means more layoffs in the West eventually, so these companies can afford to keep building datacenters that cost billions.

phi-4 is still an amazing model, and the phi models are perhaps one of Microsoft AI’s best contributions to the Generative AI ecosystem. It’s a pity that, by relying on OpenAI’s models instead, Microsoft has already been left behind. Meanwhile, export controls on AI chips have only made Chinese LLM makers hungrier and more capable. At least Microsoft will own a not-insignificant share of OpenAI when it eventually goes public, likely around 2027-28.